import pandas as pd

import numpy as np

import math

import json

import matplotlib.pyplot as plt

import seaborn as sns

from lifetimes.utils import summary_data_from_transaction_data

import datetime

from sklearn.preprocessing import MultiLabelBinarizer

from sklearn.preprocessing import StandardScaler, MinMaxScaler

from sklearn.decomposition import PCA

from sklearn.neighbors import NearestNeighbors

from sklearn.cluster import KMeans

from sklearn.cluster import DBSCAN

from sklearn.cluster import OPTICS

from sklearn.preprocessing import LabelEncoder

from sklearn import metrics

from sklearn.metrics import recall_score

from sklearn.model_selection import cross_val_score

from sklearn.model_selection import RepeatedStratifiedKFold

from sklearn.svm import LinearSVC

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

from sklearn.ensemble import RandomForestClassifier

from sklearn.ensemble import ExtraTreesClassifier

from sklearn.ensemble import BaggingClassifier

from sklearn.neighbors import KNeighborsClassifier

from sklearn.gaussian_process import GaussianProcessClassifier

from imblearn.over_sampling import SMOTE

from imblearn.pipeline import Pipeline

from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, f1_score

from sklearn.metrics import fbeta_score, make_scorer

from sklearn.model_selection import GridSearchCV, RandomizedSearchCV

from sklearn.model_selection import RandomizedSearchCV

import sklearn.model_selection

from yellowbrick.cluster.elbow import kelbow_visualizerOptimising Starbuck’s rewards program

Lionel Mpofu explores marketing analytics strategies using random forests and gradient boosting models..

Table of Contents

- Introduction

- Data Assessment & Cleaning

- Exploratory Data Analysis

- Data Preprocessing

- Prediction Modeling

Gradient Boosting Classifier

Tuning Parameter Refinement - Conclusion

Starbucks is one of the largest coffehouses in the world. They have been known for having done of the most vigorous digitalisation strategies that has seen them grow to become titans in industry. In the first quarter of 2020, it witnessed a 16pc in year over year growth recording 18.9 million active users. It is infamous for its rewards program. According to starbucks, as of end of 2020, nearly a quarter of their transactions are done through the phone. This means that, a substantial amount of its revenue relies on the rewards app. In this project, we seek to analyse customer behavior of the starbucks rewards app so we can optimise profits by targetted marketing.

This data set contains simulated data that mimics customer behavior on the Starbucks rewards mobile app. Once every few days, Starbucks sends out an offer to users of the mobile app. An offer can be merely an advertisement for a drink or an actual offer such as a discount or BOGO (buy one get one free). Some users might not receive any offer during certain weeks.

Not all users receive the same offer.

The objective is to combine transaction, demographic and offer data to determine which demographic groups respond best to which offer type.

Every offer has a validity period before the offer expires. As an example, a BOGO offer might be valid for only 5 days. Informational offers have a validity period even though these ads are merely providing information about a product; for example, if an informational offer has 7 days of validity, you can assume the customer is feeling the influence of the offer for 7 days after receiving the advertisement.

Starbuks has provided transactional data showing user purchases made on the app including the timestamp of purchase and the amount of money spent on a purchase. This transactional data also has a record for each offer that a user receives as well as a record for when a user actually views the offer. There are also records for when a user completes an offer.

Keep in mind as well that someone using the app might make a purchase through the app without having received an offer or seen an offer.

Example

To give an example, a user could receive a discount offer buy 10 dollars get 2 off on Monday. The offer is valid for 10 days from receipt. If the customer accumulates at least 10 dollars in purchases during the validity period, the customer completes the offer.

However, there are a few things to watch out for in this data set. Customers do not opt into the offers that they receive; in other words, a user can receive an offer, never actually view the offer, and still complete the offer. For example, a user might receive the “buy 10 dollars get 2 dollars off offer”, but the user never opens the offer during the 10 day validity period. The customer spends 15 dollars during those ten days. There will be an offer completion record in the data set; however, the customer was not influenced by the offer because the customer never viewed the offer.

Cleaning

This makes data cleaning especially important and tricky.

You’ll also want to take into account that some demographic groups will make purchases even if they don’t receive an offer. From a business perspective, if a customer is going to make a 10 dollar purchase without an offer anyway, you wouldn’t want to send a buy 10 dollars get 2 dollars off offer. You’ll want to try to assess what a certain demographic group will buy when not receiving any offers.

The “transaction” event does not have any “offer_id” associated to it, so we have to figure out which transactions are connected to particular offer and which ones are not (customer bought something casually).

Informational offer can not be “completed” due to it’s nature, so we need to find a way to connect it with the possible transactions.

Some demographic groups will make purchases regardless of whether they receive an offer. Ideally we would like to group them separately.

A user can complete the offer without actually seeing it. In this case user was making a regular purchase and offer completed automatically. This is not a result of particular marketing campaign but rather a coincidence.

The data is contained in three files:

- portfolio.json - containing offer ids and meta data about each offer (duration, type, etc.)

- profile.json - demographic data for each customer

- transcript.json - records for transactions, offers received, offers viewed, and offers completed

Here is the schema and explanation of each variable in the files:

portfolio.json * id (string) - offer id * offer_type (string) - type of offer ie BOGO, discount, informational * difficulty (int) - minimum required spend to complete an offer * reward (int) - reward given for completing an offer * duration (int) - time for offer to be open, in days * channels (list of strings)

profile.json * age (int) - age of the customer * became_member_on (int) - date when customer created an app account * gender (str) - gender of the customer (note some entries contain ‘O’ for other rather than M or F) * id (str) - customer id * income (float) - customer’s income

transcript.json * event (str) - record description (ie transaction, offer received, offer viewed, etc.) * person (str) - customer id * time (int) - time in hours since start of test. The data begins at time t=0 * value - (dict of strings) - either an offer id or transaction amount depending on the record

Data quality issues: * Null values for “gender” and “income” in profile.json * Age: missing value encoded as 118 in profile.json * Incorrect data format (int64 instead of datetime) in profile.json * Column names can be improved * Incompatible units - offer duration days vs. event time in hours * Different keys in transcript.json - “event id” and “event_id”

Implementation Steps:

- Data exploration

- Data cleaning

- Exploratory data analysis (EDA)

- Customer segmentation

- Exploring the resultant clusters

- Data Preprocessing

- Training the model

- Improving the model

- Choosing the best performing model

- Deploying the best model

- Exploring the prediction results

Read in the dataset

# read in the json files

portfolio = pd.read_json('./data/portfolio.json', orient='records', lines=True)

profile = pd.read_json('./data/profile.json', orient='records', lines=True)

transcript = pd.read_json('./data/transcript.json', orient='records', lines=True)Portfolio data

portfolio.head()[:5]| reward | channels | difficulty | duration | offer_type | id | |

|---|---|---|---|---|---|---|

| 0 | 10 | [email, mobile, social] | 10 | 7 | bogo | ae264e3637204a6fb9bb56bc8210ddfd |

| 1 | 10 | [web, email, mobile, social] | 10 | 5 | bogo | 4d5c57ea9a6940dd891ad53e9dbe8da0 |

| 2 | 0 | [web, email, mobile] | 0 | 4 | informational | 3f207df678b143eea3cee63160fa8bed |

| 3 | 5 | [web, email, mobile] | 5 | 7 | bogo | 9b98b8c7a33c4b65b9aebfe6a799e6d9 |

| 4 | 5 | [web, email] | 20 | 10 | discount | 0b1e1539f2cc45b7b9fa7c272da2e1d7 |

Rename the columns to meaningful names

#rename the column to meaningful names

portfolio.rename(columns={'id':'offer_id'}, inplace=True)

portfolio.rename(columns={'duration': 'offer_duration_days'}, inplace=True)

portfolio.rename(columns={'difficulty': 'spent_required'}, inplace=True)

portfolio.rename(columns={'reward': 'possible_reward'}, inplace=True)Check for null values

#check for null values

portfolio.isnull().sum()possible_reward 0

channels 0

spent_required 0

offer_duration_days 0

offer_type 0

offer_id 0

dtype: int64There are no missing values.

Extract data from the channels column

# create dummy variables for channels

portfolio_clean = portfolio.copy() # Create dummy columns for the channels column

d_chann = pd.get_dummies(portfolio_clean.channels.apply(pd.Series).stack(),

prefix="channel").sum(level=0) portfolio_clean = pd.concat([portfolio_clean, d_chann], axis=1, sort=False) portfolio_clean.drop(columns='channels', inplace=True) portfolio_clean| possible_reward | spent_required | offer_duration_days | offer_type | offer_id | channel_email | channel_mobile | channel_social | channel_web | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 10 | 10 | 7 | bogo | ae264e3637204a6fb9bb56bc8210ddfd | 1 | 1 | 1 | 0 |

| 1 | 10 | 10 | 5 | bogo | 4d5c57ea9a6940dd891ad53e9dbe8da0 | 1 | 1 | 1 | 1 |

| 2 | 0 | 0 | 4 | informational | 3f207df678b143eea3cee63160fa8bed | 1 | 1 | 0 | 1 |

| 3 | 5 | 5 | 7 | bogo | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 1 | 1 | 0 | 1 |

| 4 | 5 | 20 | 10 | discount | 0b1e1539f2cc45b7b9fa7c272da2e1d7 | 1 | 0 | 0 | 1 |

| 5 | 3 | 7 | 7 | discount | 2298d6c36e964ae4a3e7e9706d1fb8c2 | 1 | 1 | 1 | 1 |

| 6 | 2 | 10 | 10 | discount | fafdcd668e3743c1bb461111dcafc2a4 | 1 | 1 | 1 | 1 |

| 7 | 0 | 0 | 3 | informational | 5a8bc65990b245e5a138643cd4eb9837 | 1 | 1 | 1 | 0 |

| 8 | 5 | 5 | 5 | bogo | f19421c1d4aa40978ebb69ca19b0e20d | 1 | 1 | 1 | 1 |

| 9 | 2 | 10 | 7 | discount | 2906b810c7d4411798c6938adc9daaa5 | 1 | 1 | 0 | 1 |

We will use the data for modelling, so we save a copy of the portfolio dataframe here

portfolio_for_modelling=portfolio_clean.copy()There are 10 offers distinguished by offer_ids, in 3 categories bogo, discount and informational

portfolio_clean[['offer_type','offer_id']]| offer_type | offer_id | |

|---|---|---|

| 0 | bogo | ae264e3637204a6fb9bb56bc8210ddfd |

| 1 | bogo | 4d5c57ea9a6940dd891ad53e9dbe8da0 |

| 2 | informational | 3f207df678b143eea3cee63160fa8bed |

| 3 | bogo | 9b98b8c7a33c4b65b9aebfe6a799e6d9 |

| 4 | discount | 0b1e1539f2cc45b7b9fa7c272da2e1d7 |

| 5 | discount | 2298d6c36e964ae4a3e7e9706d1fb8c2 |

| 6 | discount | fafdcd668e3743c1bb461111dcafc2a4 |

| 7 | informational | 5a8bc65990b245e5a138643cd4eb9837 |

| 8 | bogo | f19421c1d4aa40978ebb69ca19b0e20d |

| 9 | discount | 2906b810c7d4411798c6938adc9daaa5 |

We will also merge some dataframe for some analysis, so we save this data here

portfolio_fr_df2=portfolio_clean.copy()Encode the offer types

portfolio_clean.loc[portfolio_clean['offer_type'] == 'bogo', 'offer_type'] = 1

portfolio_clean.loc[portfolio_clean['offer_type'] == 'discount', 'offer_type'] = 2

portfolio_clean.loc[portfolio_clean['offer_type'] == 'informational', 'offer_type'] = 3Profile data

profile = pd.read_json('./profile.json', orient='records', lines=True)profile[:5]| gender | age | id | became_member_on | income | |

|---|---|---|---|---|---|

| 0 | None | 118 | 68be06ca386d4c31939f3a4f0e3dd783 | 20170212 | NaN |

| 1 | F | 55 | 0610b486422d4921ae7d2bf64640c50b | 20170715 | 112000.0 |

| 2 | None | 118 | 38fe809add3b4fcf9315a9694bb96ff5 | 20180712 | NaN |

| 3 | F | 75 | 78afa995795e4d85b5d9ceeca43f5fef | 20170509 | 100000.0 |

| 4 | None | 118 | a03223e636434f42ac4c3df47e8bac43 | 20170804 | NaN |

profile.shape(17000, 5)Check for null values

profile.isnull().sum()[profile.isnull().sum()>0]gender 2175

income 2175

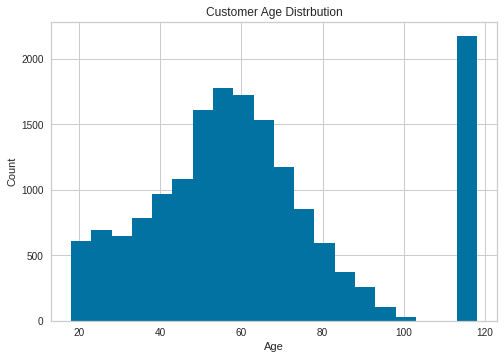

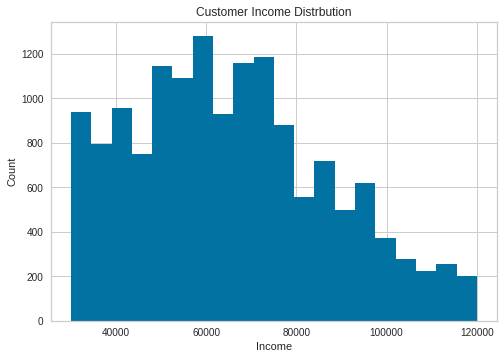

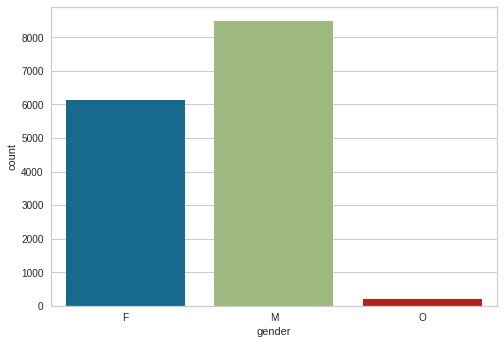

dtype: int64Display the customer profile distribution for age,income and gender

def plot_hist(data, title, xlabel, ylabel, bins=20):

"""Plot a histogram with values passed as input."""

plt.hist(data, bins=bins)

plt.title(title)

plt.xlabel(xlabel)

plt.ylabel(ylabel)

plt.show()def display_customer_profile():

'''Display customer profile with histograms'''

# Display Histogram of Customer Age

plot_hist(data=profile['age'], title='Customer Age Distrbution', xlabel='Age', ylabel='Count', bins=20)

# Display Histogram of Customer Income

plot_hist(data=profile['income'], title='Customer Income Distrbution', xlabel='Income', ylabel='Count', bins=20)

sns.countplot(profile['gender'])display_customer_profile()

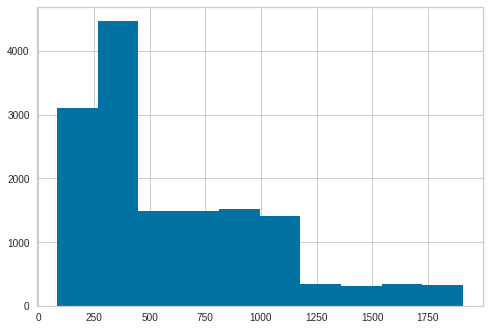

The customer age distribution has some outliers, ages 100-118. It is improbable that starbucks would have app users this age. Income is left skewed, most people earn between 40k and 80k. Gender distribution has males highest followed by females and a few O

profile[profile.age==118].count()gender 0

age 2175

id 2175

became_member_on 2175

income 0

dtype: int64profile=profile.drop(profile[profile['gender'].isnull()].index)There are 2175 customers with age 118. Obviously this cant be true. But given that this data was taken in 2018, 2018-118 is 1900 which was probably the default date on the app. 2175 is 12% of our data, dropping these from our analysis and modelling would greatly affect the model since these users made transactions and participated in the experiment.

Replace values with age 118 with nan values

profile.age.replace(118, np.nan, inplace=True)Check the datatypes in the profiles data

profile.dtypesgender object

age int64

id object

became_member_on int64

income float64

dtype: objectChange the became_member_on from int to datetime

profile['became_member_on'] = pd.to_datetime(profile['became_member_on'], format='%Y%m%d')

profile['year'] = profile['became_member_on'].dt.year

profile['quarter'] = profile['became_member_on'].dt.quarterRename column name into meaningful name

profile.rename(columns={'id': 'customer_id'}, inplace=True)profile[:5]| gender | age | customer_id | became_member_on | income | year | quarter | |

|---|---|---|---|---|---|---|---|

| 1 | F | 55 | 0610b486422d4921ae7d2bf64640c50b | 2017-07-15 | 112000.0 | 2017 | 3 |

| 3 | F | 75 | 78afa995795e4d85b5d9ceeca43f5fef | 2017-05-09 | 100000.0 | 2017 | 2 |

| 5 | M | 68 | e2127556f4f64592b11af22de27a7932 | 2018-04-26 | 70000.0 | 2018 | 2 |

| 8 | M | 65 | 389bc3fa690240e798340f5a15918d5c | 2018-02-09 | 53000.0 | 2018 | 1 |

| 12 | M | 58 | 2eeac8d8feae4a8cad5a6af0499a211d | 2017-11-11 | 51000.0 | 2017 | 4 |

Create new column “days_of_membership”

Create a new column days of membership to calculate how long someone has been a member. Maybe that will have an effect as well into how they spend and how they respond to offers. To do this, we need to know when this data was collected. This we dont have bu we can make an assumption. The last date on this dataset will be used and this is - October 18, 2018.

import datetime

data_collection_date = "2018-10-18"

profile['days_of_membership'] = datetime.datetime.strptime(data_collection_date, '%Y-%m-%d') - profile['became_member_on']

profile['days_of_membership'] = profile['days_of_membership'].dt.days.astype('int16')profile[:5]| gender | age | customer_id | became_member_on | income | year | quarter | days_of_membership | |

|---|---|---|---|---|---|---|---|---|

| 1 | F | 55 | 0610b486422d4921ae7d2bf64640c50b | 2017-07-15 | 112000.0 | 2017 | 3 | 460 |

| 3 | F | 75 | 78afa995795e4d85b5d9ceeca43f5fef | 2017-05-09 | 100000.0 | 2017 | 2 | 527 |

| 5 | M | 68 | e2127556f4f64592b11af22de27a7932 | 2018-04-26 | 70000.0 | 2018 | 2 | 175 |

| 8 | M | 65 | 389bc3fa690240e798340f5a15918d5c | 2018-02-09 | 53000.0 | 2018 | 1 | 251 |

| 12 | M | 58 | 2eeac8d8feae4a8cad5a6af0499a211d | 2017-11-11 | 51000.0 | 2017 | 4 | 341 |

Save the profile data for modelling later

profile_for_modelling=profile.copy()Create an age range column

From the age distribution we saw above, we can group into these categories

profile['age'].hist()<AxesSubplot:>

Create bins for teen, young adult, adult and elderly. 17-22 teen, 22-35 young adult, 35-60 adult, 60-103 elderly

profile['age_group']=pd.cut(profile['age'],bins=[17,22,35,60,103],labels=['teenager','young-adult','adult','elderly'])x=profile['age'].unique()Create a list of ages as categories so that we encode them

x=profile['age_group'].astype('category').cat.categories.tolist()Encode age group as 1-4 to represent the age groups

y={"age_group": {k:v for k,v in zip(x,list(range(1,len(x)+1)))}}Replace the categories with encoded values

profile.replace(y,inplace=True)Drop the age column

profile.drop(columns="age",inplace=True)Create a new income range column

Find the income distribution to come up with bins

profile['income'].describe()count 14825.000000

mean 65404.991568

std 21598.299410

min 30000.000000

25% 49000.000000

50% 64000.000000

75% 80000.000000

max 120000.000000

Name: income, dtype: float64From the distribution we see that the min is 30k and the max is 120k, the average is 65k with a standard deviation of 20k

Create new column income range

profile['income_range']=pd.cut(profile['income'],bins=[0,30000,50001,70001,90001,120001],labels=['low','low_to_mid','mid','mid_to_high','high'])profile['income_range'].value_counts()mid 5006

low_to_mid 3946

mid_to_high 3591

high 2194

low 88

Name: income_range, dtype: int64profile[:5]| gender | customer_id | became_member_on | income | year | quarter | days_of_membership | age_group | income_range | |

|---|---|---|---|---|---|---|---|---|---|

| 1 | F | 0610b486422d4921ae7d2bf64640c50b | 2017-07-15 | 112000.0 | 2017 | 3 | 460 | 3 | high |

| 3 | F | 78afa995795e4d85b5d9ceeca43f5fef | 2017-05-09 | 100000.0 | 2017 | 2 | 527 | 4 | high |

| 5 | M | e2127556f4f64592b11af22de27a7932 | 2018-04-26 | 70000.0 | 2018 | 2 | 175 | 4 | mid |

| 8 | M | 389bc3fa690240e798340f5a15918d5c | 2018-02-09 | 53000.0 | 2018 | 1 | 251 | 4 | mid |

| 12 | M | 2eeac8d8feae4a8cad5a6af0499a211d | 2017-11-11 | 51000.0 | 2017 | 4 | 341 | 3 | mid |

Encode the income categories

labels=profile['income_range'].astype('category').cat.categories.tolist()b={'income_range':{k:v for k,v in zip(labels,list(range(1,len(labels)+1)))}}profile.replace(b,inplace=True)Drop the income column since we have the income range

profile.drop(columns="income",inplace=True)Create a loyalty column based on membership

We also want to be able to categorise members as new, regular and or loyal, to aid our analysis

Let’s check days of membership distribution

profile['days_of_membership'].describe()count 14825.000000

mean 606.478988

std 419.205158

min 84.000000

25% 292.000000

50% 442.000000

75% 881.000000

max 1907.000000

Name: days_of_membership, dtype: float64profile['days_of_membership'].hist()<AxesSubplot:>

Create bins for the different member types as new, regular and loyal for days of membership between 85 (the minimum) and 1908 (the maximum)

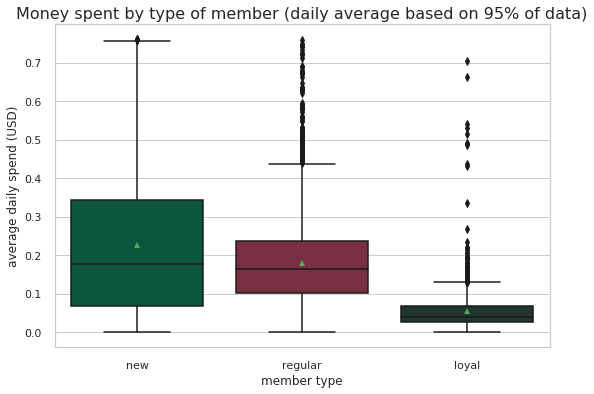

profile['member_type']=pd.cut(profile['days_of_membership'],bins=[84,650,1200,1908],labels=['new','regular','loyal'])profile['member_type'].value_counts()new 9215

regular 4293

loyal 1296

Name: member_type, dtype: int64Encode the member types as categorical variables

x=profile['member_type'].astype('category').cat.categories.tolist()y={'member_type':{k:v for k,v in zip(x,list(range(1,len(x)+1)))}}profile.replace(y,inplace=True)profile[:5]| gender | customer_id | became_member_on | year | quarter | days_of_membership | age_group | income_range | member_type | |

|---|---|---|---|---|---|---|---|---|---|

| 1 | F | 0610b486422d4921ae7d2bf64640c50b | 2017-07-15 | 2017 | 3 | 460 | 3 | 5 | 1.0 |

| 3 | F | 78afa995795e4d85b5d9ceeca43f5fef | 2017-05-09 | 2017 | 2 | 527 | 4 | 5 | 1.0 |

| 5 | M | e2127556f4f64592b11af22de27a7932 | 2018-04-26 | 2018 | 2 | 175 | 4 | 3 | 1.0 |

| 8 | M | 389bc3fa690240e798340f5a15918d5c | 2018-02-09 | 2018 | 1 | 251 | 4 | 3 | 1.0 |

| 12 | M | 2eeac8d8feae4a8cad5a6af0499a211d | 2017-11-11 | 2017 | 4 | 341 | 3 | 3 | 1.0 |

Drop the days of membership and when customer became a member since we membership type

profile.drop(columns=['days_of_membership','became_member_on'],inplace=True)profile[:5]| gender | customer_id | year | quarter | age_group | income_range | member_type | |

|---|---|---|---|---|---|---|---|

| 1 | F | 0610b486422d4921ae7d2bf64640c50b | 2017 | 3 | 3 | 5 | 1.0 |

| 3 | F | 78afa995795e4d85b5d9ceeca43f5fef | 2017 | 2 | 4 | 5 | 1.0 |

| 5 | M | e2127556f4f64592b11af22de27a7932 | 2018 | 2 | 4 | 3 | 1.0 |

| 8 | M | 389bc3fa690240e798340f5a15918d5c | 2018 | 1 | 4 | 3 | 1.0 |

| 12 | M | 2eeac8d8feae4a8cad5a6af0499a211d | 2017 | 4 | 3 | 3 | 1.0 |

Check for duplicates

profile.duplicated().sum()0Save the profile data for exploratory analysis

profile_fr_df2=profile.copy()Transcript data

transcript[:5]| person | event | value | time | |

|---|---|---|---|---|

| 0 | 78afa995795e4d85b5d9ceeca43f5fef | offer received | {'offer id': '9b98b8c7a33c4b65b9aebfe6a799e6d9'} | 0 |

| 1 | a03223e636434f42ac4c3df47e8bac43 | offer received | {'offer id': '0b1e1539f2cc45b7b9fa7c272da2e1d7'} | 0 |

| 2 | e2127556f4f64592b11af22de27a7932 | offer received | {'offer id': '2906b810c7d4411798c6938adc9daaa5'} | 0 |

| 3 | 8ec6ce2a7e7949b1bf142def7d0e0586 | offer received | {'offer id': 'fafdcd668e3743c1bb461111dcafc2a4'} | 0 |

| 4 | 68617ca6246f4fbc85e91a2a49552598 | offer received | {'offer id': '4d5c57ea9a6940dd891ad53e9dbe8da0'} | 0 |

transcript['event'].value_counts()transaction 138953

offer received 76277

offer viewed 57725

offer completed 33579

Name: event, dtype: int64transcript.shape(306534, 4)Split the value column into columns, this method takes time for a large dataset

%%time

cleaned_transcript=pd.concat([transcript.drop(['value'], axis=1), transcript['value'].apply(pd.Series)], axis=1)CPU times: user 2min 25s, sys: 2.52 s, total: 2min 28s

Wall time: 2min 30scleaned_transcript[:5]| person | event | time | offer id | amount | offer_id | reward | |

|---|---|---|---|---|---|---|---|

| 0 | 78afa995795e4d85b5d9ceeca43f5fef | offer received | 0 | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | NaN | NaN | NaN |

| 1 | a03223e636434f42ac4c3df47e8bac43 | offer received | 0 | 0b1e1539f2cc45b7b9fa7c272da2e1d7 | NaN | NaN | NaN |

| 2 | e2127556f4f64592b11af22de27a7932 | offer received | 0 | 2906b810c7d4411798c6938adc9daaa5 | NaN | NaN | NaN |

| 3 | 8ec6ce2a7e7949b1bf142def7d0e0586 | offer received | 0 | fafdcd668e3743c1bb461111dcafc2a4 | NaN | NaN | NaN |

| 4 | 68617ca6246f4fbc85e91a2a49552598 | offer received | 0 | 4d5c57ea9a6940dd891ad53e9dbe8da0 | NaN | NaN | NaN |

We have two columns offer_id, Assign values of offer id column to offer_id column to remove duplicate columns

cleaned_transcript['offer_id'] = cleaned_transcript['offer_id'].fillna(cleaned_transcript['offer id'])cleaned_transcript.drop(['offer id'], axis=1, inplace=True)Rename the columns to something more meaningful

cleaned_transcript.rename(columns={'person': 'customer_id'}, inplace=True)

cleaned_transcript.rename(columns={'amount': 'money_spent'}, inplace=True)

cleaned_transcript.rename(columns={'reward': 'money_gained'}, inplace=True)Offer amount and reward with null values will be assigned as zeros

cleaned_transcript['money_spent'] = cleaned_transcript['money_spent'].replace(np.nan, 0)

cleaned_transcript['money_gained'] = cleaned_transcript['money_gained'].replace(np.nan, 0)Change time column from hours to days

cleaned_transcript['time'] = cleaned_transcript['time']/24.0cleaned_transcript[:5]| customer_id | event | time | money_spent | offer_id | money_gained | |

|---|---|---|---|---|---|---|

| 0 | 78afa995795e4d85b5d9ceeca43f5fef | offer received | 0.0 | 0.0 | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 0.0 |

| 1 | a03223e636434f42ac4c3df47e8bac43 | offer received | 0.0 | 0.0 | 0b1e1539f2cc45b7b9fa7c272da2e1d7 | 0.0 |

| 2 | e2127556f4f64592b11af22de27a7932 | offer received | 0.0 | 0.0 | 2906b810c7d4411798c6938adc9daaa5 | 0.0 |

| 3 | 8ec6ce2a7e7949b1bf142def7d0e0586 | offer received | 0.0 | 0.0 | fafdcd668e3743c1bb461111dcafc2a4 | 0.0 |

| 4 | 68617ca6246f4fbc85e91a2a49552598 | offer received | 0.0 | 0.0 | 4d5c57ea9a6940dd891ad53e9dbe8da0 | 0.0 |

We only want to analyze the customers whose profile we have so we have to drop customers who have transaction data but do not have profile data.

# Drop customers ids that not available in the profile dataset, because we know nothing about them

cleaned_transcript = cleaned_transcript[cleaned_transcript['customer_id'].isin(profile['customer_id'])]One-hot encode events

transcript_dummies = pd.get_dummies(cleaned_transcript.event)

cleaned_transcript = pd.concat([cleaned_transcript, transcript_dummies], axis=1)Creating a copy for some exploratory analysis

transcript_fr_df2=cleaned_transcript.copy()Taking a look at the events and time columns, it seems like events are ordered according to how much time and not according to whether the offer received, resulted in offer being completed/transaction done

Further cleaning on transcript/ transaction data

Combine the offer completed and transanction done into 1 row

We want to be able to know when an offer was completed the transaction was done, so we combine the rows for offer completed and transaction done into one row

%%time

print("Total rows: {}".format(len(cleaned_transcript)))

rows_to_drop = []

# Loop over "offer completed" events:

c_df = cleaned_transcript[cleaned_transcript.event == "offer completed"]

print('Total "offer completed" rows: {}'.format(len(c_df)))

for c_index, row in c_df.iterrows():

t_index = c_index-1 # transaction row index

cleaned_transcript.at[c_index, 'money_spent'] = cleaned_transcript.at[t_index, 'money_spent'] # update "amount"

cleaned_transcript.at[c_index, 'transaction'] = 1

rows_to_drop.append(t_index) # we will drop the "transaction" row

print("\nRows to drop: {}".format(len(rows_to_drop)))

cleaned_transcript = cleaned_transcript[~cleaned_transcript.index.isin(rows_to_drop)] # faster alternative to dropping rows one by one inside the loop

print("Rows after job is finished: {}".format(len(cleaned_transcript)))

cleaned_transcript[cleaned_transcript.event == "offer completed"].sample(3)Total rows: 272762

Total "offer completed" rows: 32444

Rows to drop: 32444

Rows after job is finished: 240318

CPU times: user 5.85 s, sys: 2.65 ms, total: 5.85 s

Wall time: 5.99 s| customer_id | event | time | money_spent | offer_id | money_gained | offer completed | offer received | offer viewed | transaction | |

|---|---|---|---|---|---|---|---|---|---|---|

| 259403 | a206d7f1c7124bd0b16dd13e7932592e | offer completed | 24.00 | 11.20 | f19421c1d4aa40978ebb69ca19b0e20d | 5.0 | 1 | 0 | 0 | 1 |

| 230473 | 3f244f4dea654688ace14acb4f0257bb | offer completed | 22.25 | 3.63 | 2298d6c36e964ae4a3e7e9706d1fb8c2 | 3.0 | 1 | 0 | 0 | 1 |

| 225697 | de035e26f1c34866984482cf5c2c3e36 | offer completed | 21.75 | 18.81 | ae264e3637204a6fb9bb56bc8210ddfd | 10.0 | 1 | 0 | 0 | 1 |

Combine offer viewed and offer completed

We also want to know those who viewed and completed the offer and those who received and did not complete the offer

%%time

### TIME-CONSUMING CELL! DO NOT RE-RUN IT UNLESS NECESSARY. ###

print("Total rows: {}".format(len(cleaned_transcript)))

print('Total "offer viewed" rows: {}'.format(len(cleaned_transcript[cleaned_transcript.event == "offer completed"])))

rows_to_drop = []

# Loop over "offer completed" events:

c_df = cleaned_transcript[cleaned_transcript.event == "offer completed"]

num_rows = len(c_df)

for c_index, row in c_df.iterrows():

person = c_df.at[c_index, 'customer_id'] # save customer id

offer = c_df.at[c_index, 'offer_id'] # save offer id

# Check if there is an "offer viewed" row before "offer completed":

prev_rows = cleaned_transcript[:c_index]

idx_found = prev_rows[(prev_rows.event == "offer viewed")

& (prev_rows.customer_id == person)

& (prev_rows.offer_id == offer)].index.tolist()

if len(idx_found) > 0:

print("Updating row nr. " + str(c_index)

+ " "*100, end="\r") # erase output and print on the same line

cleaned_transcript.at[c_index, 'offer viewed'] = 1

for v_index in idx_found:

rows_to_drop.append(v_index) # we will drop the "offer viewed" row

print("\nRows to drop: {}".format(len(rows_to_drop)))

cleaned_transcript = cleaned_transcript[~cleaned_transcript.index.isin(rows_to_drop)] # faster alternative to dropping rows one by one inside the loop

print("Rows after job is finished: {}".format(len(cleaned_transcript)))

cleaned_transcript[cleaned_transcript.event == "offer completed"].sample(3)Total rows: 240318

Total "offer viewed" rows: 29581

Updating row nr. 306527

Rows to drop: 29634

Rows after job is finished: 214604

CPU times: user 25min 35s, sys: 8.41 s, total: 25min 43s

Wall time: 25min 35s| customer_id | event | time | money_spent | offer_id | money_gained | offer completed | offer received | offer viewed | transaction | |

|---|---|---|---|---|---|---|---|---|---|---|

| 124407 | 89df998636e744a981d7c907e778bea7 | offer completed | 14.0 | 10.97 | ae264e3637204a6fb9bb56bc8210ddfd | 10.0 | 1 | 0 | 1 | 1 |

| 272854 | 04fad15f96d446f483823ad08857e752 | offer completed | 25.0 | 25.85 | 2906b810c7d4411798c6938adc9daaa5 | 2.0 | 1 | 0 | 0 | 1 |

| 124865 | 7ffb3da7ed254a35bea25b35d40c47b9 | offer completed | 14.0 | 16.12 | 2906b810c7d4411798c6938adc9daaa5 | 2.0 | 1 | 0 | 0 | 1 |

The above method takes time, so to ensure that we dont run it often unless we need to, we are going to save this checkpoint by saving this df to csv. So that we save it as a csv file

cleaned_transcript[:5]| customer_id | event | time | money_spent | offer_id | money_gained | offer completed | offer received | offer viewed | transaction | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 78afa995795e4d85b5d9ceeca43f5fef | offer received | 0.0 | 0.0 | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 0.0 | 0 | 1 | 0 | 0 |

| 2 | e2127556f4f64592b11af22de27a7932 | offer received | 0.0 | 0.0 | 2906b810c7d4411798c6938adc9daaa5 | 0.0 | 0 | 1 | 0 | 0 |

| 5 | 389bc3fa690240e798340f5a15918d5c | offer received | 0.0 | 0.0 | f19421c1d4aa40978ebb69ca19b0e20d | 0.0 | 0 | 1 | 0 | 0 |

| 7 | 2eeac8d8feae4a8cad5a6af0499a211d | offer received | 0.0 | 0.0 | 3f207df678b143eea3cee63160fa8bed | 0.0 | 0 | 1 | 0 | 0 |

| 8 | aa4862eba776480b8bb9c68455b8c2e1 | offer received | 0.0 | 0.0 | 0b1e1539f2cc45b7b9fa7c272da2e1d7 | 0.0 | 0 | 1 | 0 | 0 |

Rename the offer columns by putting a _ so that we can easily index them

cleaned_transcript.rename(columns= {'offer completed': 'offer_completed'}, inplace=True)

cleaned_transcript.rename(columns={'offer received': 'offer_received'}, inplace=True)

cleaned_transcript.rename(columns={'offer viewed': 'offer_viewed'}, inplace=True)How many offers were viewed and completed

print("Total offers viewed and completed: {}".format(len(cleaned_transcript[(cleaned_transcript.event == "offer completed")

& (cleaned_transcript.offer_viewed == 1)])))Total offers viewed and completed: 24949How many offers were completed without being viewed

print("Total offers completed but NOT viewed: {}".format(len(cleaned_transcript[(cleaned_transcript.event == "offer completed")

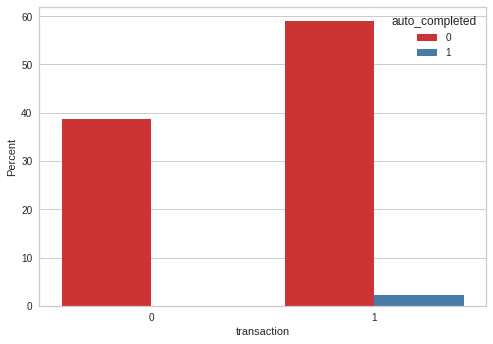

& (cleaned_transcript.offer_viewed == 0)])))Total offers completed but NOT viewed: 4632Make new event category for offers completed by accident: “auto completed”

There are customers who dont receive the offer but complete it and others who receive the offer, dont view it but complete it

%%time

for index, row in cleaned_transcript.loc[(cleaned_transcript.event == "offer completed") & (cleaned_transcript.offer_viewed == 0)].iterrows():

cleaned_transcript.at[index, 'event'] = "auto completed"

cleaned_transcript.at[index, 'offer_completed'] = 0

# Also add new numerical column:

cleaned_transcript['auto_completed'] = np.where(cleaned_transcript['event']=='auto completed', 1, 0)CPU times: user 783 ms, sys: 4.02 ms, total: 787 ms

Wall time: 1.02 scleaned_transcript['auto_completed'].value_counts()0 209972

1 4632

Name: auto_completed, dtype: int64Only 2.2% of customers auto completed the offers

Check if the function worked, if function worked, it should return len 0

len(cleaned_transcript[(cleaned_transcript.event == "offer_completed") & (cleaned_transcript.offer_viewed == 0)])0Is it possible for a customer to receive the same offer multiple times? Lets pick an offer ID and customer ID and test this

cleaned_transcript[(cleaned_transcript.customer_id == "fffad4f4828548d1b5583907f2e9906b") & (cleaned_transcript.offer_id == "f19421c1d4aa40978ebb69ca19b0e20d")]| customer_id | event | time | money_spent | offer_id | money_gained | offer_completed | offer_received | offer_viewed | transaction | auto_completed | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 184 | fffad4f4828548d1b5583907f2e9906b | offer received | 0.0 | 0.00 | f19421c1d4aa40978ebb69ca19b0e20d | 0.0 | 0 | 1 | 0 | 0 | 0 |

| 26145 | fffad4f4828548d1b5583907f2e9906b | offer completed | 1.5 | 6.97 | f19421c1d4aa40978ebb69ca19b0e20d | 5.0 | 1 | 0 | 1 | 1 | 0 |

| 150794 | fffad4f4828548d1b5583907f2e9906b | offer received | 17.0 | 0.00 | f19421c1d4aa40978ebb69ca19b0e20d | 0.0 | 0 | 1 | 0 | 0 | 0 |

| 221937 | fffad4f4828548d1b5583907f2e9906b | offer completed | 21.5 | 12.18 | f19421c1d4aa40978ebb69ca19b0e20d | 5.0 | 1 | 0 | 1 | 1 | 0 |

Apparently a customer can receive the same offer multiple times, so we should factor this in when doing our EDA

transcript_checkpoint=pd.read_csv('./transcript_checkpoint_file.csv')transcript_checkpoint| Unnamed: 0 | customer_id | event | time | money_spent | offer_id | money_gained | offer_completed | offer_received | offer_viewed | transaction | auto_completed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 78afa995795e4d85b5d9ceeca43f5fef | offer received | 0.00 | 0.00 | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 0.0 | 0 | 1 | 0 | 0 | 0 |

| 1 | 2 | e2127556f4f64592b11af22de27a7932 | offer received | 0.00 | 0.00 | 2906b810c7d4411798c6938adc9daaa5 | 0.0 | 0 | 1 | 0 | 0 | 0 |

| 2 | 5 | 389bc3fa690240e798340f5a15918d5c | offer received | 0.00 | 0.00 | f19421c1d4aa40978ebb69ca19b0e20d | 0.0 | 0 | 1 | 0 | 0 | 0 |

| 3 | 7 | 2eeac8d8feae4a8cad5a6af0499a211d | offer received | 0.00 | 0.00 | 3f207df678b143eea3cee63160fa8bed | 0.0 | 0 | 1 | 0 | 0 | 0 |

| 4 | 8 | aa4862eba776480b8bb9c68455b8c2e1 | offer received | 0.00 | 0.00 | 0b1e1539f2cc45b7b9fa7c272da2e1d7 | 0.0 | 0 | 1 | 0 | 0 | 0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 214599 | 306527 | 24f56b5e1849462093931b164eb803b5 | offer completed | 29.75 | 22.64 | fafdcd668e3743c1bb461111dcafc2a4 | 2.0 | 1 | 0 | 1 | 1 | 0 |

| 214600 | 306529 | b3a1272bc9904337b331bf348c3e8c17 | transaction | 29.75 | 1.59 | NaN | 0.0 | 0 | 0 | 0 | 1 | 0 |

| 214601 | 306530 | 68213b08d99a4ae1b0dcb72aebd9aa35 | transaction | 29.75 | 9.53 | NaN | 0.0 | 0 | 0 | 0 | 1 | 0 |

| 214602 | 306531 | a00058cf10334a308c68e7631c529907 | transaction | 29.75 | 3.61 | NaN | 0.0 | 0 | 0 | 0 | 1 | 0 |

| 214603 | 306532 | 76ddbd6576844afe811f1a3c0fbb5bec | transaction | 29.75 | 3.53 | NaN | 0.0 | 0 | 0 | 0 | 1 | 0 |

214604 rows × 12 columns

## 3. Exploratory Data Analysis

First we save the cleaned data from all the datasets for ease of access

Create a new dataframe for some demographic analysis

Build a dataframe with aggregated transaction, offer, and demographics data for customer behavior analysis. As we saw earlier, the same customer can receive the same offer multiple times, they can also receive other offers of the same type or of different types. We will build a new dataframe by: 1. Getting the offer type data per customer 2. Getting offer id data per customer 3. Building a dataframe by merging extracted variables, demographics, offers, and transaction data

transcript_fr_df2[:5]| customer_id | event | time | money_spent | offer_id | money_gained | offer completed | offer received | offer viewed | transaction | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 78afa995795e4d85b5d9ceeca43f5fef | offer received | 0.0 | 0.0 | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 0.0 | 0 | 1 | 0 | 0 |

| 2 | e2127556f4f64592b11af22de27a7932 | offer received | 0.0 | 0.0 | 2906b810c7d4411798c6938adc9daaa5 | 0.0 | 0 | 1 | 0 | 0 |

| 5 | 389bc3fa690240e798340f5a15918d5c | offer received | 0.0 | 0.0 | f19421c1d4aa40978ebb69ca19b0e20d | 0.0 | 0 | 1 | 0 | 0 |

| 7 | 2eeac8d8feae4a8cad5a6af0499a211d | offer received | 0.0 | 0.0 | 3f207df678b143eea3cee63160fa8bed | 0.0 | 0 | 1 | 0 | 0 |

| 8 | aa4862eba776480b8bb9c68455b8c2e1 | offer received | 0.0 | 0.0 | 0b1e1539f2cc45b7b9fa7c272da2e1d7 | 0.0 | 0 | 1 | 0 | 0 |

transcript_fr_df2.rename(columns= {'offer completed': 'offer_completed'}, inplace=True)

transcript_fr_df2.rename(columns={'offer received': 'offer_received'}, inplace=True)

transcript_fr_df2.rename(columns={'offer viewed': 'offer_viewed'}, inplace=True)profile_fr_df2[:5]| gender | customer_id | year | quarter | age_group | income_range | member_type | |

|---|---|---|---|---|---|---|---|

| 1 | F | 0610b486422d4921ae7d2bf64640c50b | 2017 | 3 | 3 | 5 | 1.0 |

| 3 | F | 78afa995795e4d85b5d9ceeca43f5fef | 2017 | 2 | 4 | 5 | 1.0 |

| 5 | M | e2127556f4f64592b11af22de27a7932 | 2018 | 2 | 4 | 3 | 1.0 |

| 8 | M | 389bc3fa690240e798340f5a15918d5c | 2018 | 1 | 4 | 3 | 1.0 |

| 12 | M | 2eeac8d8feae4a8cad5a6af0499a211d | 2017 | 4 | 3 | 3 | 1.0 |

portfolio_fr_df2[:5]| possible_reward | spent_required | offer_duration_days | offer_type | offer_id | channel_email | channel_mobile | channel_social | channel_web | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 10 | 10 | 7 | bogo | ae264e3637204a6fb9bb56bc8210ddfd | 1 | 1 | 1 | 0 |

| 1 | 10 | 10 | 5 | bogo | 4d5c57ea9a6940dd891ad53e9dbe8da0 | 1 | 1 | 1 | 1 |

| 2 | 0 | 0 | 4 | informational | 3f207df678b143eea3cee63160fa8bed | 1 | 1 | 0 | 1 |

| 3 | 5 | 5 | 7 | bogo | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 1 | 1 | 0 | 1 |

| 4 | 5 | 20 | 10 | discount | 0b1e1539f2cc45b7b9fa7c272da2e1d7 | 1 | 0 | 0 | 1 |

Merge the transcript, portfolio and profile data

trans_prof = pd.merge(transcript_fr_df2, profile_fr_df2, on='customer_id', how='left')df=pd.merge(trans_prof, portfolio_fr_df2, on='offer_id', how='left')df[:5]| customer_id | event | time | money_spent | offer_id | money_gained | offer_completed | offer_received | offer_viewed | transaction | ... | income_range | member_type | possible_reward | spent_required | offer_duration_days | offer_type | channel_email | channel_mobile | channel_social | channel_web | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 78afa995795e4d85b5d9ceeca43f5fef | offer received | 0.0 | 0.0 | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 0.0 | 0 | 1 | 0 | 0 | ... | 5 | 1.0 | 5.0 | 5.0 | 7.0 | bogo | 1.0 | 1.0 | 0.0 | 1.0 |

| 1 | e2127556f4f64592b11af22de27a7932 | offer received | 0.0 | 0.0 | 2906b810c7d4411798c6938adc9daaa5 | 0.0 | 0 | 1 | 0 | 0 | ... | 3 | 1.0 | 2.0 | 10.0 | 7.0 | discount | 1.0 | 1.0 | 0.0 | 1.0 |

| 2 | 389bc3fa690240e798340f5a15918d5c | offer received | 0.0 | 0.0 | f19421c1d4aa40978ebb69ca19b0e20d | 0.0 | 0 | 1 | 0 | 0 | ... | 3 | 1.0 | 5.0 | 5.0 | 5.0 | bogo | 1.0 | 1.0 | 1.0 | 1.0 |

| 3 | 2eeac8d8feae4a8cad5a6af0499a211d | offer received | 0.0 | 0.0 | 3f207df678b143eea3cee63160fa8bed | 0.0 | 0 | 1 | 0 | 0 | ... | 3 | 1.0 | 0.0 | 0.0 | 4.0 | informational | 1.0 | 1.0 | 0.0 | 1.0 |

| 4 | aa4862eba776480b8bb9c68455b8c2e1 | offer received | 0.0 | 0.0 | 0b1e1539f2cc45b7b9fa7c272da2e1d7 | 0.0 | 0 | 1 | 0 | 0 | ... | 3 | 1.0 | 5.0 | 20.0 | 10.0 | discount | 1.0 | 0.0 | 0.0 | 1.0 |

5 rows × 24 columns

There are 10 different offers that were rolled out to customers. Change the offer id to indicate the type of offer

df.loc[df.offer_id == "ae264e3637204a6fb9bb56bc8210ddfd", "offer_id"] = "bogo_1"

df.loc[df.offer_id == "9b98b8c7a33c4b65b9aebfe6a799e6d9", "offer_id"] = "bogo_3"

df.loc[df.offer_id == "2298d6c36e964ae4a3e7e9706d1fb8c2", "offer_id"] = "discount_2"

df.loc[df.offer_id == "2906b810c7d4411798c6938adc9daaa5", "offer_id"] = "discount_4"

df.loc[df.offer_id == "f19421c1d4aa40978ebb69ca19b0e20d", "offer_id"] = "bogo_2"

df.loc[df.offer_id == "3f207df678b143eea3cee63160fa8bed", "offer_id"] = "info_1"

df.loc[df.offer_id == "0b1e1539f2cc45b7b9fa7c272da2e1d7", "offer_id"] = "discount_1"

df.loc[df.offer_id == "4d5c57ea9a6940dd891ad53e9dbe8da0", "offer_id"] = "bogo_4"

df.loc[df.offer_id == "5a8bc65990b245e5a138643cd4eb9837", "offer_id"] = "info_2"Save the merged and cleaned dataframe

cleaned_df=df.copy() #remember to change the name of the other merged df from df to not confuse thingsGet Customer offer (received,viewed and completed) info per customer by offer type

This function takes in the merged data and collects the offer data of whether an offer was received, viewed and completed, annd does this for each offer type that was sent to the customer

def cust_offer_type(cleaned_df, offer_type=None):

''' Get offer data (received, viewed and completed) per customer by offer type

INPUT:

cleaned_df - merged transactions, portfolio, and profile datasets

offer_type - bogo, discount, informational

RETURNS:

offer_type_cust - offer type data (received, viewed and completed) per customer

'''

# Define dict

data = dict()

for e in ['received', 'viewed', 'completed']:

# Get 'completed' data for informational offers

if offer_type == 'informational' and e == 'completed':

continue

flag = (cleaned_df['offer_{}'.format(e)] == 1)

key = e

if offer_type:

flag = flag & (cleaned_df.offer_type == offer_type)

key = '{}_'.format(offer_type) + key

data[key] = cleaned_df[flag].groupby('customer_id').offer_id.count()

# Get 'reward' data for informational offers

flag = (cleaned_df.offer_completed == 1)

if offer_type != 'informational':

key = 'money_gained'

if offer_type:

flag = flag & (cleaned_df.offer_type == offer_type)

key = '{}_'.format(offer_type) + key

data[key] = cleaned_df[flag].groupby('customer_id').money_gained.sum()

return dataGet offer data per customer by offer id

Remember that there are 10 different offer ids which a customer could potentially receive. We create a function that collects the offer data for each customer for each offer id and returns offer id sent to each customer

def cust_offer_id(cleaned_df, offer_id):

''' Get offer data (received, viewed and completed) per customer by offer id

INPUT:

cleaned_df - merged transactions, portfolio, and profile datasets

offer_id - 'bogo_1','bogo_2','bogo_3','bogo_4','discount_1','discount_2','discount_3','discount_4','info_1','info_2'

RETURNS:

cust_offer_id - offer id data per customer

'''

data = dict()

for e in ['received', 'viewed', 'completed']:

# Get 'completed' data for informational offers

if offer_id in ['info_1', 'info_2'] and e == 'completed':

continue

event = 'offer_{}'.format(e)

flag = (cleaned_df[event] == 1) & (cleaned_df.offer_id == offer_id)

key = '{}_{}'.format(offer_id, e)

data[key] = cleaned_df[flag].groupby('customer_id').offer_id.count()

# Get 'reward' data for informational offers

flag = (cleaned_df.offer_completed == 1) & (cleaned_df.offer_id == offer_id)

if offer_id not in ['info_1', 'info_2']:

key = '{}_money_gained'.format(offer_id)

data[key] = cleaned_df[flag].groupby('customer_id').money_gained.sum()

return dataCreate merged customer dataframe with aggregated transaction, offer data and demographics

Having collected the offer data per customer for each offer type and offer id, we now merge the datasets and add this data

def merged_cust(cleaned_df, profile_fr_df2):

''' Build a dataframe with aggregated purchase and offer data and demographics

INPUT:

cleaned_df - merged transactions, portfolio, and profile datasets

RETURNS:

merged_cust - df with aggregated customer data

'''

cust_dict = dict()

# Get total transaction data

transactions = cleaned_df[cleaned_df.transaction == 1].groupby('customer_id')

cust_dict['total_expense'] = transactions.money_spent.sum()

cust_dict['total_transactions'] = transactions.money_spent.count()

# Get aggregate offer data

cust_dict.update(cust_offer_type(cleaned_df))

# Get offer type data

for ot in ['bogo', 'discount', 'informational']:

cust_dict.update(cust_offer_type(cleaned_df, ot))

# Get offer id data

for oi in ['bogo_1','bogo_2','bogo_3','bogo_4',

'discount_1','discount_2','discount_3','discount_4',

'info_1','info_2']:

cust_dict.update(cust_offer_id(cleaned_df, oi))

# Build df, aggregate dict values and demographic data

merged_cust = pd.concat(cust_dict.values(), axis=1, sort=False);

merged_cust.columns = cust_dict.keys()

merged_cust.fillna(0, inplace=True)

merged_cust = pd.merge(merged_cust, profile_fr_df2.set_index('customer_id'),

left_index=True, right_index=True)

# Add columns for net expense

merged_cust['net_expense'] = merged_cust['total_expense'] - merged_cust['money_gained']

return merged_cust

# Show merged df

merged_cust = merged_cust(cleaned_df, profile_fr_df2)

merged_cust.head()| total_expense | total_transactions | received | money_gained | viewed | completed | bogo_received | bogo_money_gained | bogo_viewed | bogo_completed | ... | info_1_viewed | info_2_received | info_2_viewed | gender | year | quarter | age_group | income_range | member_type | net_expense | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0009655768c64bdeb2e877511632db8f | 127.60 | 8.0 | 5.0 | 9.0 | 4.0 | 3.0 | 1.0 | 5.0 | 1.0 | 1.0 | ... | 1.0 | 1.0 | 1.0 | M | 2017 | 2 | 2 | 4 | 1.0 | 118.60 |

| 0011e0d4e6b944f998e987f904e8c1e5 | 79.46 | 5.0 | 5.0 | 13.0 | 5.0 | 3.0 | 1.0 | 5.0 | 1.0 | 1.0 | ... | 1.0 | 1.0 | 1.0 | O | 2018 | 1 | 3 | 3 | 1.0 | 66.46 |

| 0020c2b971eb4e9188eac86d93036a77 | 196.86 | 8.0 | 5.0 | 14.0 | 3.0 | 3.0 | 2.0 | 10.0 | 1.0 | 1.0 | ... | 0.0 | 1.0 | 1.0 | F | 2016 | 1 | 3 | 4 | 2.0 | 182.86 |

| 0020ccbbb6d84e358d3414a3ff76cffd | 154.05 | 12.0 | 4.0 | 13.0 | 4.0 | 3.0 | 2.0 | 10.0 | 2.0 | 2.0 | ... | 0.0 | 1.0 | 1.0 | F | 2016 | 4 | 2 | 3 | 2.0 | 141.05 |

| 003d66b6608740288d6cc97a6903f4f0 | 48.34 | 18.0 | 5.0 | 9.0 | 4.0 | 3.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 1.0 | 1.0 | 1.0 | F | 2017 | 2 | 2 | 4 | 1.0 | 39.34 |

5 rows × 59 columns

The result is a merged customer dataset that shows the total expenses,net expense, total offers viewed, received,completed by each customer and which offer types and offer ids

Get any customer offer information grouped by any column of your choosing

This function will take any column in the merged customer dataframe and return the offer status aggregates grouped by a column of your choosing

def offer_status(merged_cust, stat, offer, by_col, aggr='sum'):

''' Get any column for customers that received but not viewed an offer, viewed but not completed the offer,

and those that viewed and completed the offer, grouped by a column

INPUT:

merged_cust - aggregated offer and demographic df

stat - column of interest

offer - offer of interest

by_col - column used to group the data

aggr - aggregation method sum or mean

RETURNS:

(received_agg, viewed_agg, completed) - tuple with sum aggregation

'''

# Define dict

received_col = '{}_received'.format(offer)

viewed_col = '{}_viewed'.format(offer)

received = (merged_cust[received_col] > 0) & (merged_cust[viewed_col] == 0)

completed = None

# Aggregate customer behavior data for schema

if offer not in ['informational', 'info_1', 'info_2']:

completed_col = '{}_completed'.format(offer)

viewed = (merged_cust[viewed_col] > 0) & (merged_cust[completed_col] == 0)

completed_off = (merged_cust[completed_col] > 0)

if aggr == 'sum':

completed = merged_cust[completed_off].groupby(by_col)[stat].sum()

elif aggr == 'mean':

completed = merged_cust[completed_off].groupby(by_col)[stat].mean()

else:

viewed = (merged_cust[viewed_col] > 0)

if aggr == 'sum':

received_agg = merged_cust[received].groupby(by_col)[stat].sum()

viewed_agg = merged_cust[viewed].groupby(by_col)[stat].sum()

elif aggr == 'mean':

received_agg = merged_cust[received].groupby(by_col)[stat].mean()

viewed_agg = merged_cust[viewed].groupby(by_col)[stat].mean()

return received_agg, viewed_agg, completedCalculate the average expense for customers according to offer data

Calculate the average expense for all customers to compare the average expense for those that, received, viewed, completed the offer and the permututations therewith

def avg_expense(merged_cust, offer, by_col):

''' Get the average expense for customers that received but not viewed an offer, viewed but not completed the offer, and those that viewed and completed the offer, group by a column

INPUT:

merged_cust - aggregated offer and demographic df

offer - offer of interest

by_col - column used to group the data

RETURNS:

(received, viewed, completed) - tuple with the average expense

'''

# Get totals

received_total, viewed_total, completed_total = offer_status(merged_cust,

'total_expense',

offer, by_col)

received_trans, viewed_trans, completed_trans = offer_status(merged_cust,

'total_transactions',

offer, by_col)

# Calculate averages for received and viewed offers

received_avg = received_total / received_trans

received_avg.fillna(0, inplace=True)

viewed_avg = viewed_total / viewed_trans

viewed_avg.fillna(0, inplace=True)

completed_avg = None

if offer not in ['informational', 'info_1', 'info_2']:

completed_avg = completed_total / completed_trans

return received_avg, viewed_avg, completed_avgPlot the average expense per transaction for each customer per offer type

Plot according to whether they received, viewed and or completed an offer. The plot shows the offer type and the average expense

def offer_earnings_plot(merged_cust, offer):

''' Plot the average expense per transaction for customersthat have received, viewed and completed an offer.

INPUT:

merged_cust - customer df

offer - offer type

RETURNS:

(age, incomme, and gender) - plots

'''

# Set palette

plt.rcParams["image.cmap"] = "Set1"

plt.rcParams['axes.prop_cycle'] = plt.cycler(color=plt.cm.Set1.colors)

# Define variables

received_by = dict()

viewed_by = dict()

completed_by = dict()

received_avg_by = dict()

viewed_avg_by = dict()

completed_avg_by = dict()

# Aggregate data by age, income, and gender

for key in ['age_group', 'income_range', 'gender']:

received_by[key], viewed_by[key], completed_by[key] = offer_status(merged_cust,

'net_expense',

offer, key,

aggr='mean')

by = avg_expense(merged_cust, offer, key)

received_avg_by[key], viewed_avg_by[key], completed_avg_by[key] = by

# Plot layout

plt.figure(figsize=(16, 10))

# Plot offer expense by 'bogo'

plt.subplot(231)

plt.plot(received_avg_by['age_group'], label='{}-received'.format(offer))

plt.plot(viewed_avg_by['age_group'], label='{}-viewed'.format(offer))

if offer not in ['informational', 'info_1', 'info_2']:

plt.plot(completed_avg_by['age_group'], label='{}-completed'.format(offer))

plt.legend(loc='upper left')

plt.title('Average Transaction Value by Age')

plt.xlabel('Age')

plt.ylabel('USD');

# Plot offer expense by 'discount'

plt.subplot(232)

plt.plot(received_avg_by['income_range'], label='{}-received'.format(offer))

plt.plot(viewed_avg_by['income_range'], label='{}-viewed'.format(offer))

if offer not in ['informational', 'info_1', 'info_2']:

plt.plot(completed_avg_by['income_range'], label='{}-completed'.format(offer))

plt.legend(loc='upper left')

plt.title('Average Transaction Value by Income')

plt.xlabel('Income')

plt.ylabel('USD');

# Plot offer expense by 'informational'

plt.subplot(233)

index = np.array([0, 1, 2])

bar_width = 0.3

plt.bar(index, received_avg_by['gender'].reindex(['M', 'F', 'O']), bar_width,

label='{}-received'.format(offer))

plt.bar(index + bar_width, viewed_avg_by['gender'].reindex(['M', 'F', 'O']),

bar_width, label='{}-viewed'.format(offer))

if offer not in ['informational', 'info_1', 'info_2']:

plt.bar(index+2*bar_width, completed_avg_by['gender'].reindex(['M', 'F', 'O']),

bar_width, label='{}-completed'.format(offer))

plt.legend(loc='upper left')

plt.title('Average Transaction Value by Gender')

plt.xticks(index + bar_width, ('Male', 'Female', 'Other'))

plt.xlabel('Gender')

plt.ylabel('USD');Map the values back to their categorical variables for analysis

merged_cust['income_range'] = merged_cust['income_range'].map({1: 'low', 2: 'low_to_mid', 3:'mid',4:'mid_to_high',5:'high'})

merged_cust['age_group'] = merged_cust['age_group'].map({1: 'teenager', 2: 'young-adult', 3:'adult', 4:'elderly'})

merged_cust['member_type'] = merged_cust['member_type'].map({1: 'new', 2: 'regular', 3:'loyal'})Plot bogo offer earnings

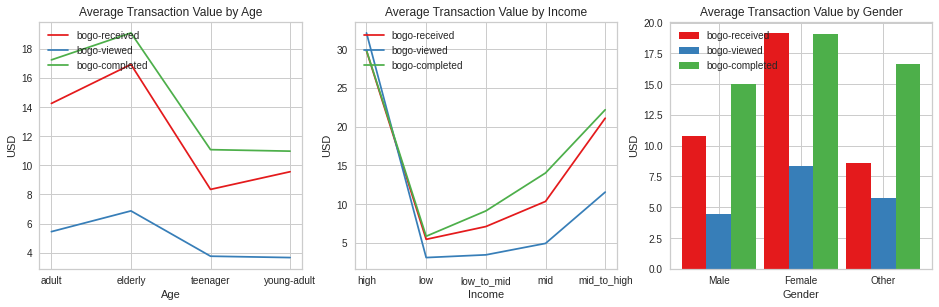

offer_earnings_plot(merged_cust, 'bogo')

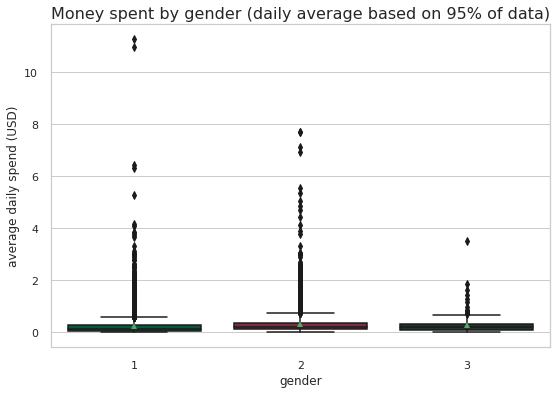

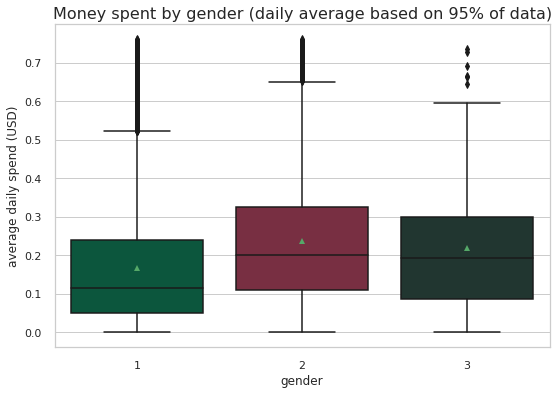

The pattern in terms of offers, received, viewed and completed is the same accross age, income and gender demographics.

The elderly have the highest average transaction for BOGO offers. In general, as age increases, customers spend more on BOGO.

High income earners spend the highest on BOGO followed by mid-to-high earners. In general we observe an increase in spending with an increase in income.

Females spend the most on BOGO but in terms of view rate, those that did not specfy gender spent more on BOGO if we compare how many received vs how many completed BOGO.

Plot discount offer earnings

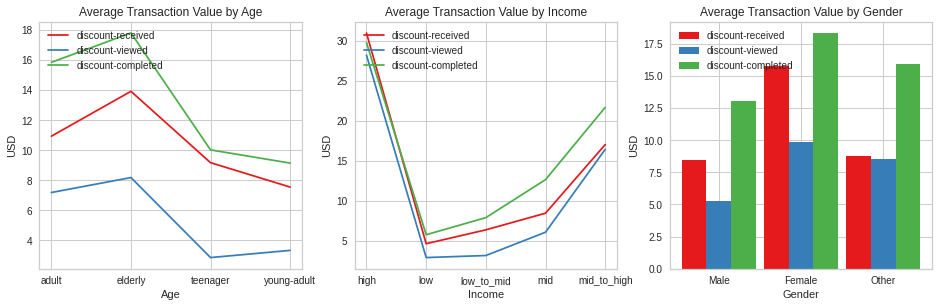

offer_earnings_plot(merged_cust, 'discount')

The same trends we observed for BOGO seem to apply here as well.

Transactions increase with an increase in age with those above 60 spending the most on discounts.

High income earners will spend an average of 30USD on discount offers. Mid to high earners will also spent a lot on discount

Females are also the highest spenders on discount earners and again we see those with unspecified gender have a higher conversion rate of all demographics

Plot informational offer earnings

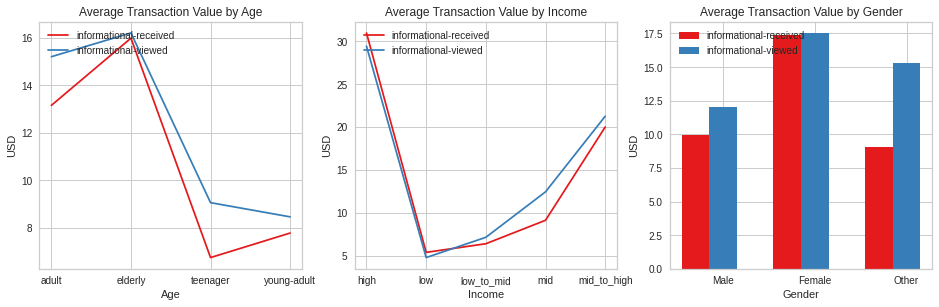

offer_earnings_plot(merged_cust, 'informational')

Informational offers follow the same pattern seen on all offers.

Get the money gained by customers for completed offers

def net_earnings(merged_cust, offer, q=0.5):

'''Get the net_earnings for customers that viewed and completed offers

INPUT:

offer - offer of interest

q - quantile to be used

RETURNS:

net_earnings - median of total transaction value

'''

# Flag customers that viewed offers

flag = (merged_cust['{}_viewed'.format(offer)] > 0)

# Sort through positive net earnings

flag = flag & (merged_cust.net_expense > 0)

# Aggregate those with at least 5 transactions

flag = flag & (merged_cust.total_transactions >= 5)

# Aggregate viewed and completed offers

if offer not in ['info_1', 'info_2']:

flag = flag & (merged_cust['{}_completed'.format(offer)] > 0)

return merged_cust[flag].net_expense.quantile(q)net_earnings(merged_cust,'bogo')129.85999999999999# Define loop that sorts by highest earnings

def greatest_earnings(merged_cust, n_top=2, q=0.5, offers=None):

'''Sort offers based on the ones that result in the highest net_expense

INPUT:

customers - dataframe with aggregated data of the offers

n_top - number of offers to be returned (default: 2)

q - quantile used for sorting

offers - list of offers to be sorted

RETURNS:

sorted list of offers, in descending order according to the median net_expense

'''

# Sort for offers earnings in second quantile

if not offers:

offers = ['bogo_1','bogo_2','bogo_3','bogo_4',

'discount_1','discount_2','discount_3','discount_4',

'info_1','info_2']

offers.sort(key=lambda x: net_earnings(merged_cust, x, q), reverse=True)

offers_dict = {o: net_earnings(merged_cust, o, q) for o in offers}

return offers[:n_top], offers_dict# Print 5 offers by most to least popular, highest to least highest earnings

offers = greatest_earnings(merged_cust, n_top=5)offers(['discount_1', 'bogo_1', 'bogo_4', 'bogo_3', 'bogo_2'],

{'discount_1': 145.425,

'bogo_1': 145.325,

'bogo_4': 145.23000000000002,

'bogo_3': 131.14,

'bogo_2': 131.03,

'discount_2': 124.99000000000001,

'discount_3': nan,

'discount_4': 138.07999999999998,

'info_1': 124.17000000000002,

'info_2': 102.96000000000001})Create another merge for more analysis

Create a new dataframe that will combine transaction data with offer type such that the data about offers received, viewed and completed for each offer type are in one row.

updated_cleaned_transcript=pd.read_csv('./updated_clean_transcript.csv')portfolio_clean| possible_reward | spent_required | offer_duration_days | offer_type | offer_id | channel_email | channel_mobile | channel_social | channel_web | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 10 | 10 | 7 | 1 | ae264e3637204a6fb9bb56bc8210ddfd | 1 | 1 | 1 | 0 |

| 1 | 10 | 10 | 5 | 1 | 4d5c57ea9a6940dd891ad53e9dbe8da0 | 1 | 1 | 1 | 1 |

| 2 | 0 | 0 | 4 | 3 | 3f207df678b143eea3cee63160fa8bed | 1 | 1 | 0 | 1 |

| 3 | 5 | 5 | 7 | 1 | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 1 | 1 | 0 | 1 |

| 4 | 5 | 20 | 10 | 2 | 0b1e1539f2cc45b7b9fa7c272da2e1d7 | 1 | 0 | 0 | 1 |

| 5 | 3 | 7 | 7 | 2 | 2298d6c36e964ae4a3e7e9706d1fb8c2 | 1 | 1 | 1 | 1 |

| 6 | 2 | 10 | 10 | 2 | fafdcd668e3743c1bb461111dcafc2a4 | 1 | 1 | 1 | 1 |

| 7 | 0 | 0 | 3 | 3 | 5a8bc65990b245e5a138643cd4eb9837 | 1 | 1 | 1 | 0 |

| 8 | 5 | 5 | 5 | 1 | f19421c1d4aa40978ebb69ca19b0e20d | 1 | 1 | 1 | 1 |

| 9 | 2 | 10 | 7 | 2 | 2906b810c7d4411798c6938adc9daaa5 | 1 | 1 | 0 | 1 |

profile[:5]| gender | customer_id | year | quarter | age_group | income_range | member_type | |

|---|---|---|---|---|---|---|---|

| 1 | F | 0610b486422d4921ae7d2bf64640c50b | 2017 | 3 | 3 | 5 | 1.0 |

| 3 | F | 78afa995795e4d85b5d9ceeca43f5fef | 2017 | 2 | 4 | 5 | 1.0 |

| 5 | M | e2127556f4f64592b11af22de27a7932 | 2018 | 2 | 4 | 3 | 1.0 |

| 8 | M | 389bc3fa690240e798340f5a15918d5c | 2018 | 1 | 4 | 3 | 1.0 |

| 12 | M | 2eeac8d8feae4a8cad5a6af0499a211d | 2017 | 4 | 3 | 3 | 1.0 |

profile_transcript_df=profile.merge(updated_cleaned_transcript, on='customer_id', how='right')len(profile_transcript_df) == len(updated_cleaned_transcript)Truecomplete_df = portfolio_clean.merge(profile_transcript_df, on='offer_id', how='right')Create a copy of the merged dataframe for EDA

master_df=complete_df.copy()Create another copy that we will use for customer segmentation

clustering_df=master_df.copy()# reconverting the values of the following features from numerical values to its original categorical values.

master_df['offer_type'] = master_df['offer_type'].map({1: 'BOGO', 2: 'Discount', 3: 'Informational'})

master_df['income_range'] = master_df['income_range'].map({1: 'low', 2: 'low_to_mid', 3:'mid',4:'mid_to_high',5:'high'})

master_df['age_group'] = master_df['age_group'].map({1: 'teenager', 2: 'young-adult', 3:'adult', 4:'elderly'})

master_df['member_type'] = master_df['member_type'].map({1: 'new', 2: 'regular', 3:'loyal'})master_df['offer_type'].value_counts()BOGO 50077

Discount 47491

Informational 22660

Name: offer_type, dtype: int64Connect “transaction” with “informational” offer

We want to connect informational offer with transaction done. Such that all the offer completed rows can show the offer type that was completed. Then all the remaining transactions will be for uncompleted offers. To find such transactions, we need to make sure that:

- Offer type is “informational”

- Customer saw the offer - “offer_viewed” is true

- The same customer made a purchase within offer validity period

%time

##added offer received to see what it does

print("Total rows: {}".format(len(master_df)))

print('Total "transaction" rows: {}'.format(len(master_df[master_df.event == "transaction"])))

rows_to_drop = []

# Check all informational offers that were viewed:

informational_df = master_df.loc[(master_df.offer_type == "Informational") & (master_df.event == "offer viewed")]

for index, row in informational_df.iterrows():

customer = master_df.at[index, 'customer_id'] # get customer id

duration_hrs = master_df.at[index, 'offer_duration_days'] # get offer duration

viewed_time = master_df.at[index, 'time'] # get the time when offer was viewed

# Check all transactions of this particular customer:

transaction_df = master_df.loc[(master_df.event == "transaction") & (master_df.customer_id == customer)]

for transaction_index, transaction_row in transaction_df.iterrows():

if master_df.at[transaction_index, 'time'] >= viewed_time: # transaction was AFTER offer was viewed

if (master_df.at[transaction_index, 'time'] - viewed_time) >= duration_hrs: # offer was still VALID

print("Updating row nr. " + str(index)

+ " "*100, end="\r") # erase output and print on the same line

# Update the event (offer viewed) row:

master_df.at[index, 'money_spent'] = master_df.at[transaction_index, 'money_spent']

master_df.at[index, 'event'] = "offer completed"

master_df.at[index, 'offer_completed'] = 1

master_df.at[index, 'transaction'] = 1

master_df.at[index, 'time'] = master_df.at[transaction_index, 'time']

# Drop the transaction row later:

rows_to_drop.append(transaction_index)

break # to skip any later transactions and just use the first one that matches our search

master_df = master_df[~master_df.index.isin(rows_to_drop)] # faster alternative to dropping rows one by one

print("Rows after job is finished: {}".format(len(master_df)))

print("Transaction rows after job is finished: {}".format(len(master_df[master_df.event == "transaction"])))

master_df[(master_df.offer_type == "Informational") & (master_df.event == "offer completed")].head(3)CPU times: user 6 µs, sys: 0 ns, total: 6 µs

Wall time: 11.7 µs

Total rows: 214604

Total "transaction" rows: 94376

Rows after job is finished: 208256

Transaction rows after job is finished: 88028| possible_reward | spent_required | offer_duration_days | offer_type | offer_id | channel_email | channel_mobile | channel_social | channel_web | gender | ... | Unnamed: 0 | event | time | money_spent | money_gained | offer_completed | offer_received | offer_viewed | transaction | auto_completed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 105909 | 0.0 | 0.0 | 3.0 | Informational | 5a8bc65990b245e5a138643cd4eb9837 | 1.0 | 1.0 | 1.0 | 0.0 | F | ... | 85291 | offer completed | 15.75 | 23.93 | 0.0 | 1 | 0 | 1 | 1 | 0 |

| 105911 | 0.0 | 0.0 | 3.0 | Informational | 5a8bc65990b245e5a138643cd4eb9837 | 1.0 | 1.0 | 1.0 | 0.0 | F | ... | 123541 | offer completed | 19.00 | 23.30 | 0.0 | 1 | 0 | 1 | 1 | 0 |

| 105913 | 0.0 | 0.0 | 3.0 | Informational | 5a8bc65990b245e5a138643cd4eb9837 | 1.0 | 1.0 | 1.0 | 0.0 | F | ... | 77214 | offer completed | 15.00 | 22.99 | 0.0 | 1 | 0 | 1 | 1 | 0 |

3 rows × 26 columns

print("Number of informational offers that were viewed but not completed:")

len(master_df[(master_df.offer_type == "Informational") & (master_df.event == "offer viewed")])Number of informational offers that were viewed but not completed:2778Connect “transaction” with “bogo” offer

We want to connect bogo offer with transaction done. Such that all the offer completed rows can show the offer type that was completed. Then all the remaining transactions will be for uncompleted offers. To find such transactions, we need to make sure that:

- Offer type is “informational”

- Customer received saw the offer - “offer_viewed” is true

- The same customer made a purchase within offer validity period

%time

##added offer received to see what it does

print("Total rows: {}".format(len(master_df)))

print('Total "transaction" rows: {}'.format(len(master_df[master_df.event == "transaction"])))

rows_to_drop = []

# Check all informational offers that were viewed:

informational_df = master_df.loc[(master_df.offer_type == "BOGO") & (master_df.event == "offer viewed")]

for index, row in informational_df.iterrows():

customer = master_df.at[index, 'customer_id'] # get customer id

duration_hrs = master_df.at[index, 'offer_duration_days'] # get offer duration

viewed_time = master_df.at[index, 'time'] # get the time when offer was viewed

# Check all transactions of this particular customer:

transaction_df = master_df.loc[(master_df.event == "transaction") & (master_df.customer_id == customer)]

for transaction_index, transaction_row in transaction_df.iterrows():

if master_df.at[transaction_index, 'time'] >= viewed_time: # transaction was AFTER offer was viewed

if (master_df.at[transaction_index, 'time'] - viewed_time) >= duration_hrs: # offer was still VALID

print("Updating row nr. " + str(index)

+ " "*100, end="\r") # erase output and print on the same line

# Update the event (offer viewed) row:

master_df.at[index, 'money_spent'] = master_df.at[transaction_index, 'money_spent']

master_df.at[index, 'event'] = "offer completed"

master_df.at[index, 'offer_completed'] = 1

master_df.at[index, 'transaction'] = 1

master_df.at[index, 'time'] = master_df.at[transaction_index, 'time']

# Drop the transaction row later:

rows_to_drop.append(transaction_index)

break # to skip any later transactions and just use the first one that matches our search

master_df = master_df[~master_df.index.isin(rows_to_drop)] # faster alternative to dropping rows one by one

print("Rows after job is finished: {}".format(len(master_df)))

print("Transaction rows after job is finished: {}".format(len(master_df[master_df.event == "transaction"])))

master_df[(master_df.offer_type == "BOGO") & (master_df.event == "offer completed")].head(3)CPU times: user 7 µs, sys: 0 ns, total: 7 µs

Wall time: 11.7 µs

Total rows: 208256

Total "transaction" rows: 88028

Rows after job is finished: 203163

Transaction rows after job is finished: 82935| possible_reward | spent_required | offer_duration_days | offer_type | offer_id | channel_email | channel_mobile | channel_social | channel_web | gender | ... | Unnamed: 0 | event | time | money_spent | money_gained | offer_completed | offer_received | offer_viewed | transaction | auto_completed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 5.0 | 5.0 | 7.0 | BOGO | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 1.0 | 1.0 | 0.0 | 1.0 | F | ... | 47583 | offer completed | 5.50 | 19.89 | 5.0 | 1 | 0 | 1 | 1 | 0 |

| 6 | 5.0 | 5.0 | 7.0 | BOGO | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 1.0 | 1.0 | 0.0 | 1.0 | M | ... | 200086 | offer completed | 20.75 | 15.63 | 5.0 | 1 | 0 | 1 | 1 | 0 |

| 18 | 5.0 | 5.0 | 7.0 | BOGO | 9b98b8c7a33c4b65b9aebfe6a799e6d9 | 1.0 | 1.0 | 0.0 | 1.0 | M | ... | 29498 | offer completed | 16.25 | 1.54 | 0.0 | 1 | 0 | 1 | 1 | 0 |

3 rows × 26 columns

print("Number of BOGO offers that were viewed but not completed:")

len(master_df[(master_df.offer_type == "BOGO") & (master_df.event == "offer viewed")])Number of BOGO offers that were viewed but not completed:3958Connect “transaction” with “discount” offer

We want to connect bogo offer with transaction done. Such that all the offer completed rows can show the offer type that was completed. Then all the remaining transactions will be for uncompleted offers. To find such transactions, we need to make sure that:

- Offer type is “discount”

- Customer received and saw the offer - “offer_viewed” is true

- The same customer made a purchase within offer validity period

%time

##added offer received to see what it does

print("Total rows: {}".format(len(master_df)))

print('Total "transaction" rows: {}'.format(len(master_df[master_df.event == "transaction"])))

rows_to_drop = []

# Check all informational offers that were viewed:

informational_df = master_df.loc[(master_df.offer_type == "Discount") & (master_df.event == "offer received")

& (master_df.event == "offer viewed")]

for index, row in informational_df.iterrows():

customer = master_df.at[index, 'customer_id'] # get customer id

duration_hrs = master_df.at[index, 'offer_duration_days'] # get offer duration

viewed_time = master_df.at[index, 'time'] # get the time when offer was viewed

# Check all transactions of this particular customer:

transaction_df = master_df.loc[(master_df.event == "transaction") & (master_df.customer_id == customer)]

for transaction_index, transaction_row in transaction_df.iterrows():

if master_df.at[transaction_index, 'time'] >= viewed_time: # transaction was AFTER offer was viewed

if (master_df.at[transaction_index, 'time'] - viewed_time) >= duration_hrs: # offer was still VALID

print("Updating row nr. " + str(index)

+ " "*100, end="\r") # erase output and print on the same line

# Update the event (offer viewed) row:

master_df.at[index, 'money_spent'] = master_df.at[transaction_index, 'money_spent']

master_df.at[index, 'event'] = "offer completed"

master_df.at[index, 'offer_completed'] = 1

master_df.at[index, 'transaction'] = 1

master_df.at[index, 'time'] = master_df.at[transaction_index, 'time']

# Drop the transaction row later:

rows_to_drop.append(transaction_index)

break # to skip any later transactions and just use the first one that matches our search

master_df = master_df[~master_df.index.isin(rows_to_drop)] # faster alternative to dropping rows one by one

print("Rows after job is finished: {}".format(len(master_df)))

print("Transaction rows after job is finished: {}".format(len(master_df[master_df.event == "transaction"])))

master_df[(master_df.offer_type == "Discount") & (master_df.event == "offer completed")].head(3)CPU times: user 3 µs, sys: 0 ns, total: 3 µs

Wall time: 6.68 µs

Total rows: 203163

Total "transaction" rows: 82935

Rows after job is finished: 203163

Transaction rows after job is finished: 82935| possible_reward | spent_required | offer_duration_days | offer_type | offer_id | channel_email | channel_mobile | channel_social | channel_web | gender | ... | Unnamed: 0 | event | time | money_spent | money_gained | offer_completed | offer_received | offer_viewed | transaction | auto_completed | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 144112 | 2.0 | 10.0 | 7.0 | Discount | 2906b810c7d4411798c6938adc9daaa5 | 1.0 | 1.0 | 0.0 | 1.0 | F | ... | 77217 | offer completed | 8.0 | 27.23 | 2.0 | 1 | 0 | 1 | 1 | 0 |

| 144114 | 2.0 | 10.0 | 7.0 | Discount | 2906b810c7d4411798c6938adc9daaa5 | 1.0 | 1.0 | 0.0 | 1.0 | M | ... | 177270 | offer completed | 18.0 | 11.50 | 2.0 | 1 | 0 | 1 | 1 | 0 |

| 144118 | 2.0 | 10.0 | 7.0 | Discount | 2906b810c7d4411798c6938adc9daaa5 | 1.0 | 1.0 | 0.0 | 1.0 | M | ... | 106954 | offer completed | 13.0 | 115.00 | 2.0 | 1 | 0 | 1 | 1 | 0 |

3 rows × 26 columns

print("Number of Discount offers that were viewed but not completed:")

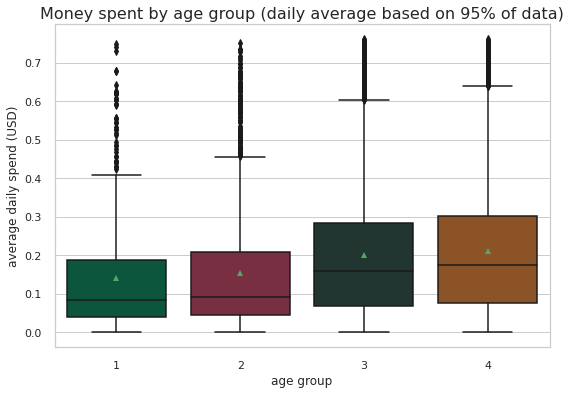

len(master_df[(master_df.offer_type == "Discount") & (master_df.event == "offer viewed")])Number of Discount offers that were viewed but not completed:5460Distribution of Age Groups among customers

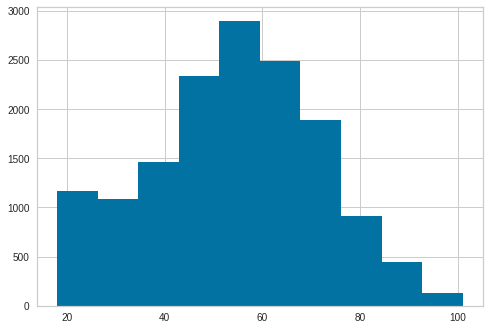

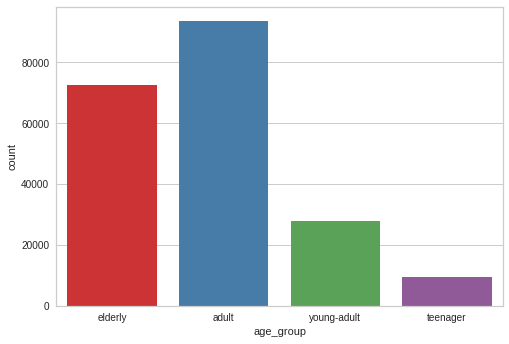

sns.countplot(master_df['age_group'])<AxesSubplot:xlabel='age_group', ylabel='count'>

master_df['age_group'].value_counts()adult 93596

elderly 72478

young-adult 27734

teenager 9355

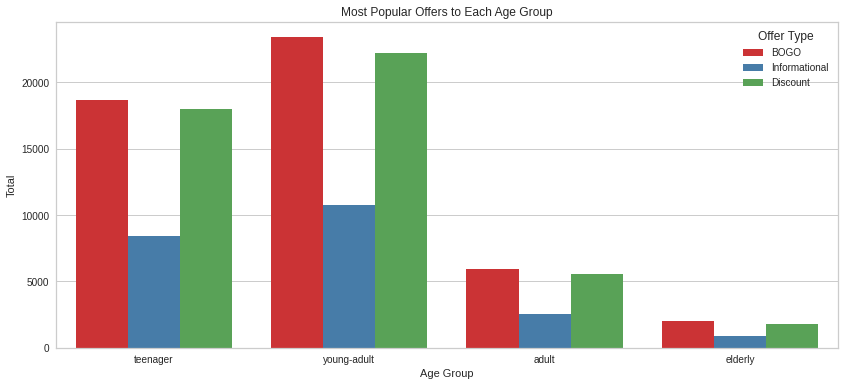

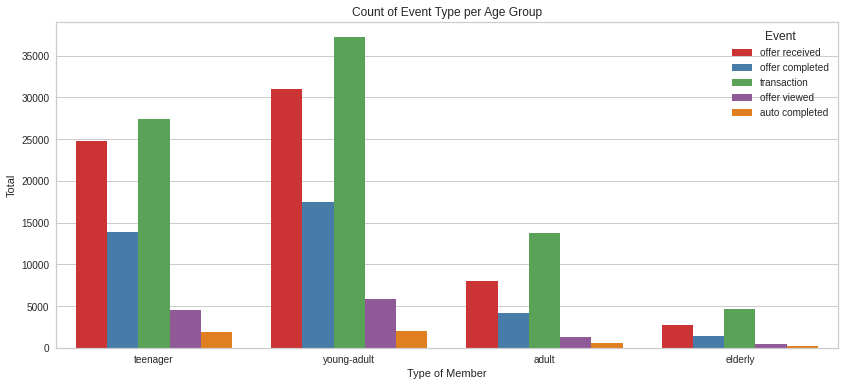

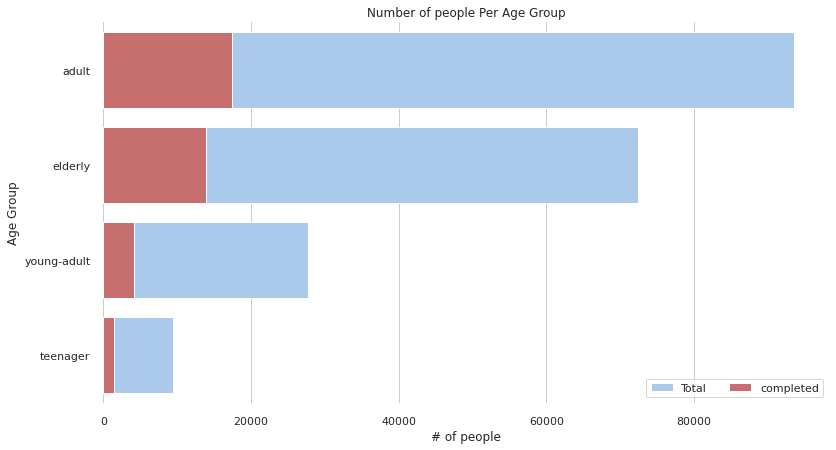

Name: age_group, dtype: int64The distribution of ages among starbucks customers is skewed towards the older group. The elderly are the largest population followed by the adults. Teenagers are the smallest group

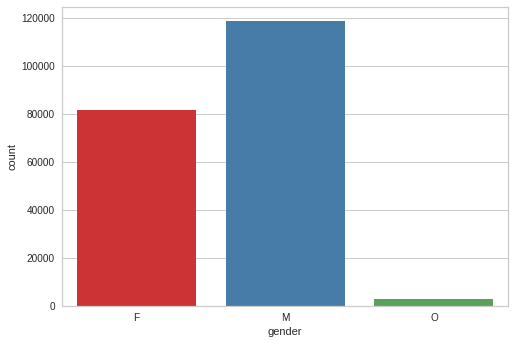

Distribution of Gender among customers

sns.countplot(master_df['gender'])<AxesSubplot:xlabel='gender', ylabel='count'>

master_df['gender'].value_counts()M 118718

F 81552

O 2893

Name: gender, dtype: int6458.6 % of customers are male, 39.8% are female and 1.4% prefer not to specify gender

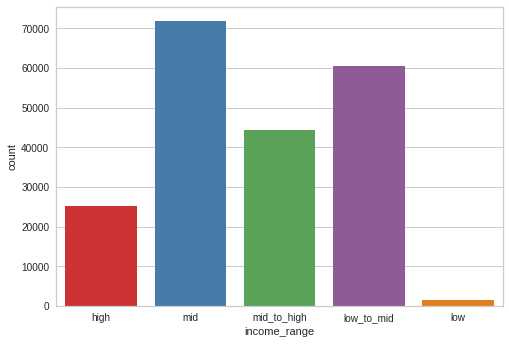

Distribution of income among customers

sns.countplot(master_df['income_range'])<AxesSubplot:xlabel='income_range', ylabel='count'>

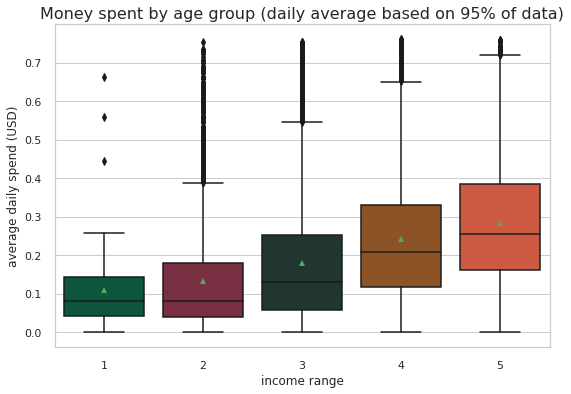

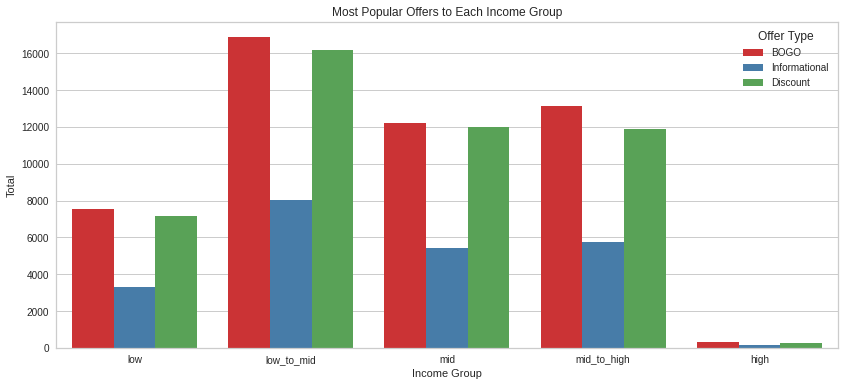

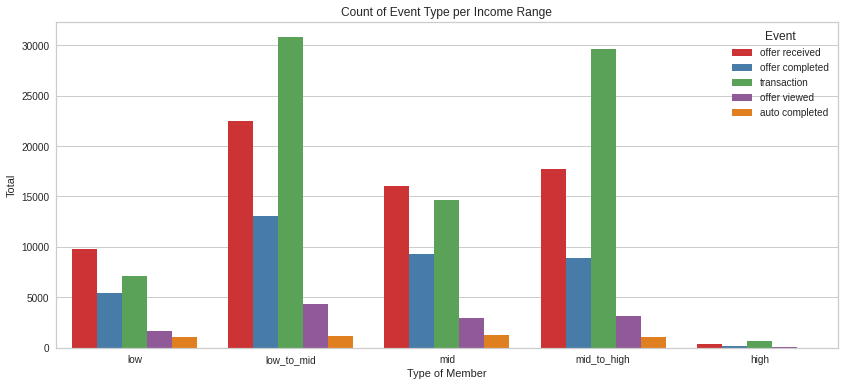

Middle income and low_to_mid income earners are occupy a huge proportion of the population, with mid income earners being the dorminant. Low earners are fewer, they are the least

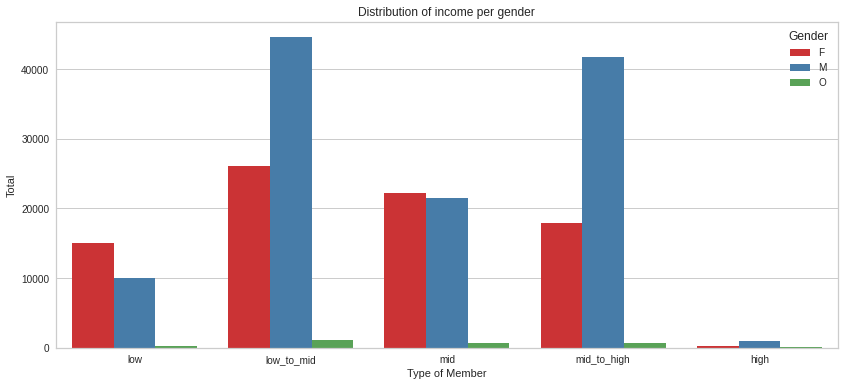

Distribution of income by gender among customers

plt.figure(figsize=(14, 6))

g = sns.countplot(x="income_range", hue="gender", data=master_df)

plt.title('Distribution of income per gender')

plt.ylabel('Total')

plt.xlabel('Type of Member')

xlabels = ['low','low_to_mid','mid','mid_to_high','high']

g.set_xticklabels(xlabels)

plt.xticks(rotation = 0)

plt.legend(title='Gender')

plt.show();

master_df.groupby(['income_range','gender']).customer_id.count()income_range gender

high F 14980

M 9945

O 230

low F 327

M 953

O 62

low_to_mid F 17953

M 41775

O 726

mid F 26124

M 44576

O 1170

mid_to_high F 22168

M 21469

O 705

Name: customer_id, dtype: int64Females are the highest earners. Most men are low to mid and mid earners. There’s an almost equal distribution of male and females among the mid to high bracket

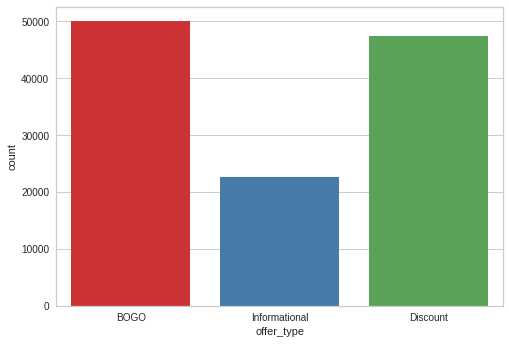

Distribution of Offer Type and Offer ID during experiment

sns.countplot(master_df['offer_type'])<AxesSubplot:xlabel='offer_type', ylabel='count'>

master_df['offer_type'].value_counts()BOGO 50077

Discount 47491

Informational 22660

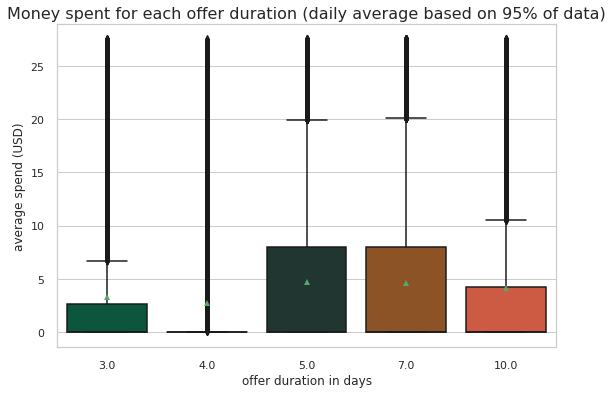

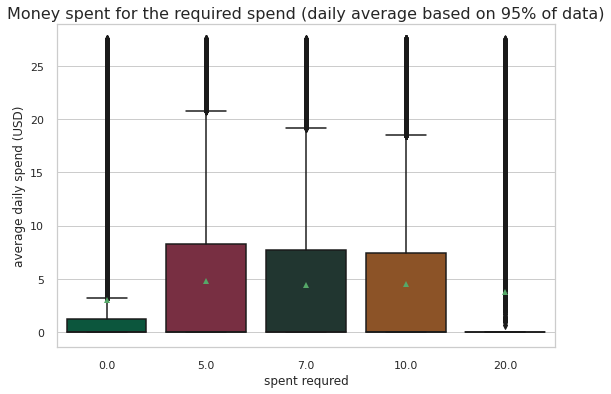

Name: offer_type, dtype: int64There are 3 types of offers presented to customers, BOGO, discount and informational. BOGO and discount were sent out more.BOGO was the most distributed offer, 25% of distributed offers were BOGO, 23% were Discount and 11.2% were Informational offers. There were 10 different offers from the 3 different offer types, there were 4 types of BOGO offers, 4 types of discount offers and 2 types of informational offers.

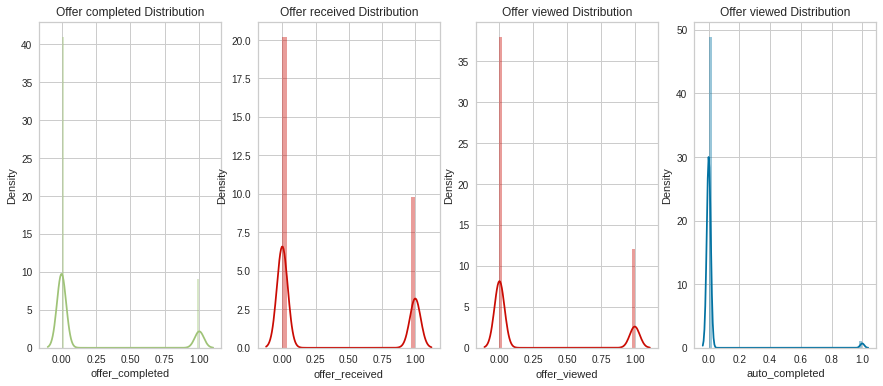

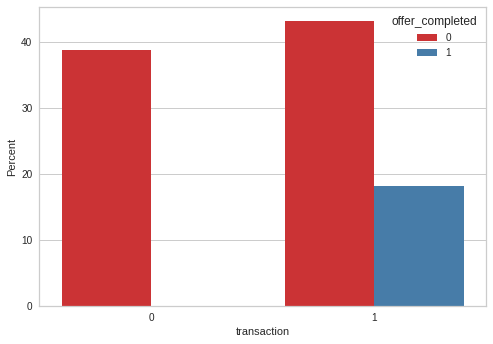

Distribution of offer received viewed and completed

f, axes = plt.subplots(ncols=4, figsize=(15, 6))

sns.distplot(master_df.offer_completed, kde=True, color="g", ax=axes[0]).set_title('Offer completed Distribution')

sns.distplot(master_df.offer_received, kde=True, color="r", ax=axes[1]).set_title('Offer received Distribution')

sns.distplot(master_df.offer_viewed, kde=True, color="r", ax=axes[2]).set_title('Offer viewed Distribution')

sns.distplot(master_df.auto_completed, kde=True, color="b", ax=axes[3]).set_title('Offer viewed Distribution')Text(0.5, 1.0, 'Offer viewed Distribution')

The distribution of offer data follows the same pattern. There were a lot of offers not completed vs those that were completed. The same with offers received, viewed and auto completed

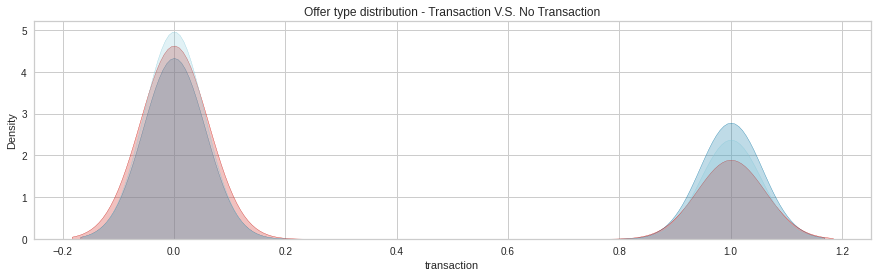

Distribution of Transactions for the offers

#KDEPlot: Kernel Density Estimate Plot

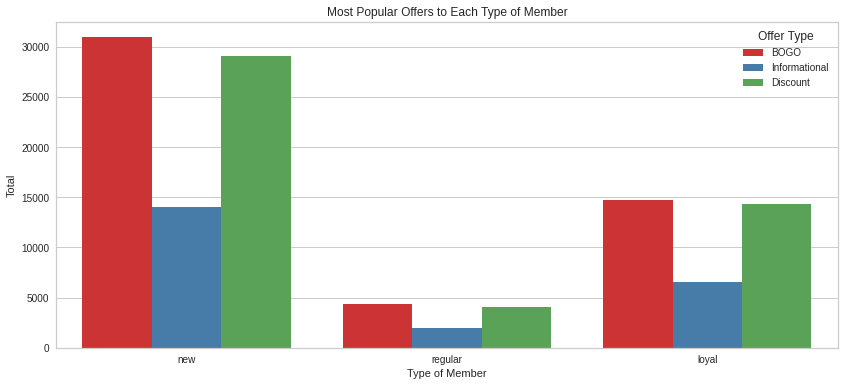

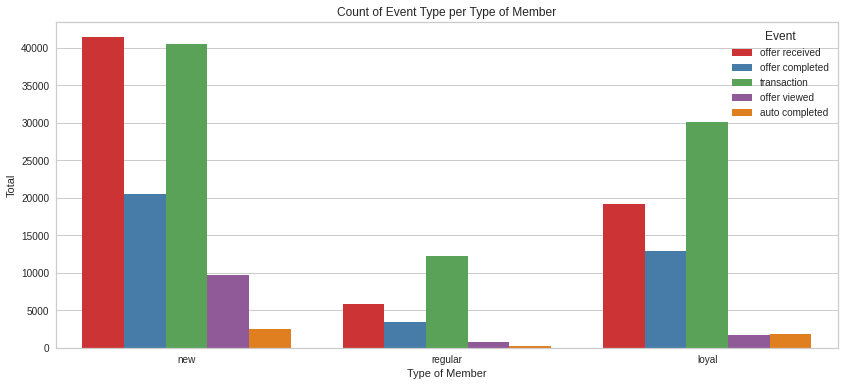

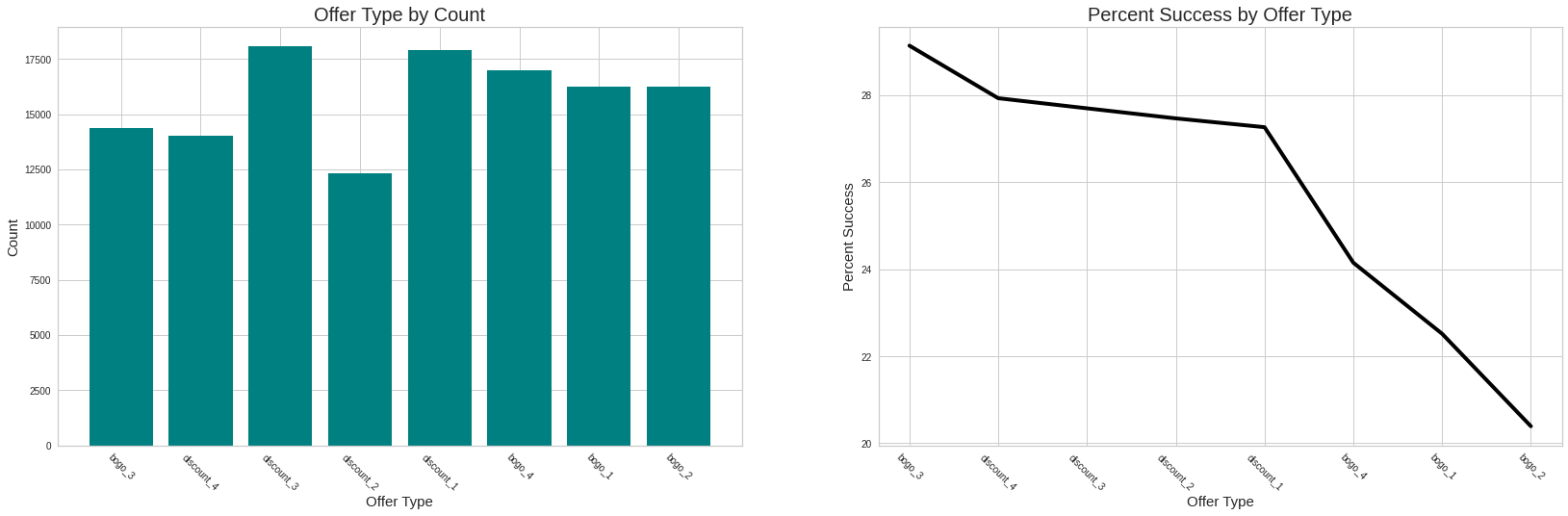

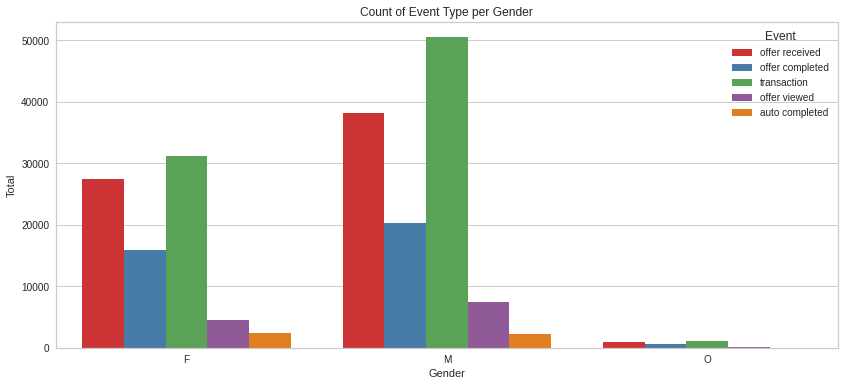

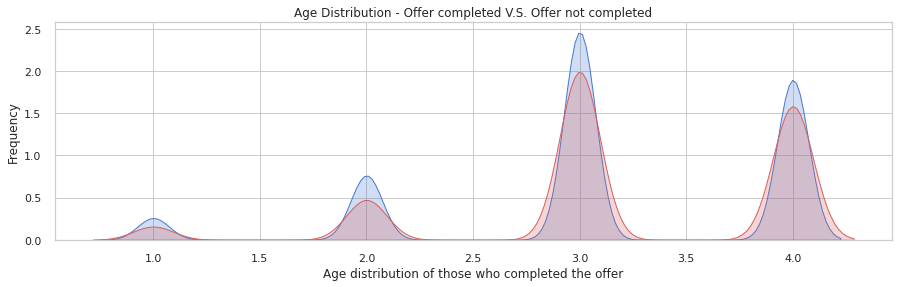

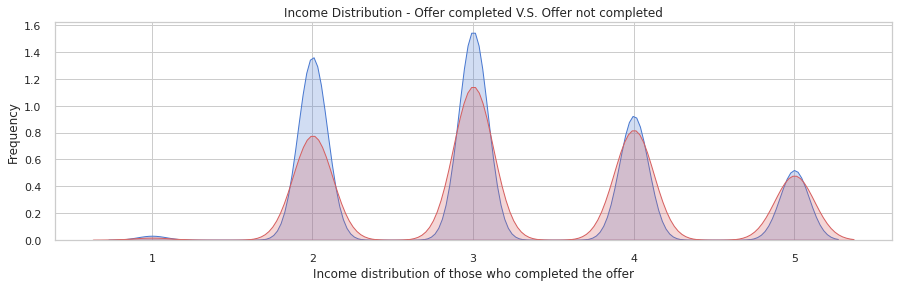

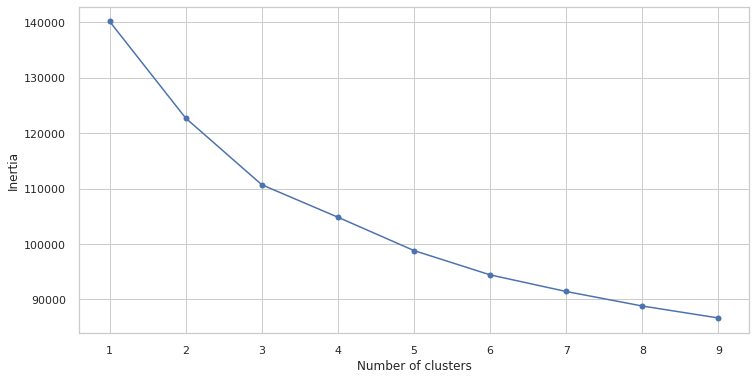

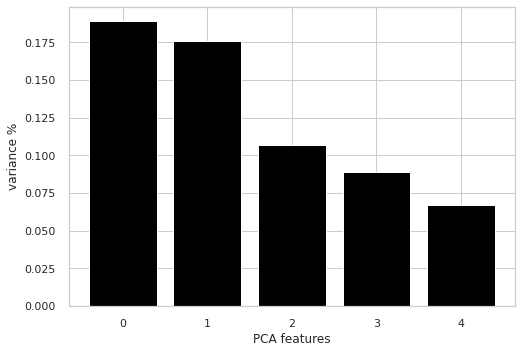

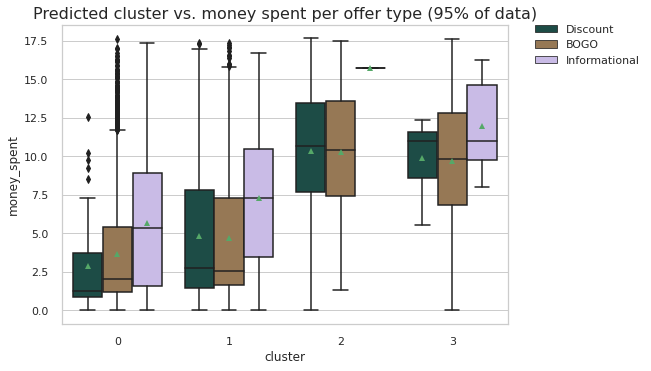

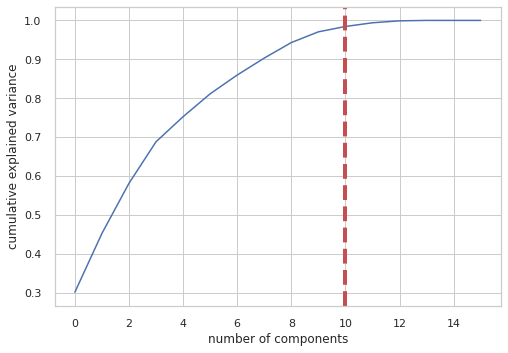

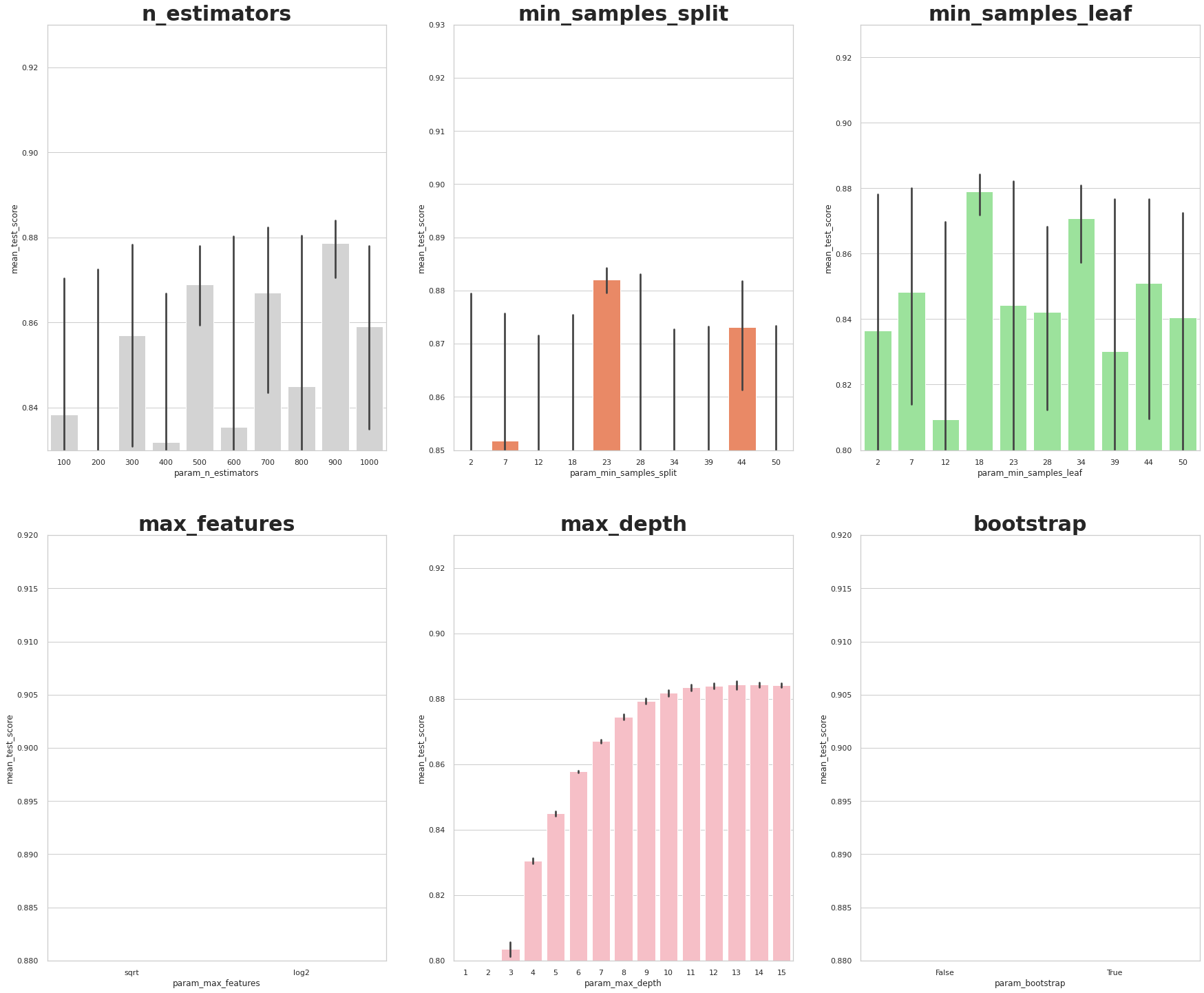

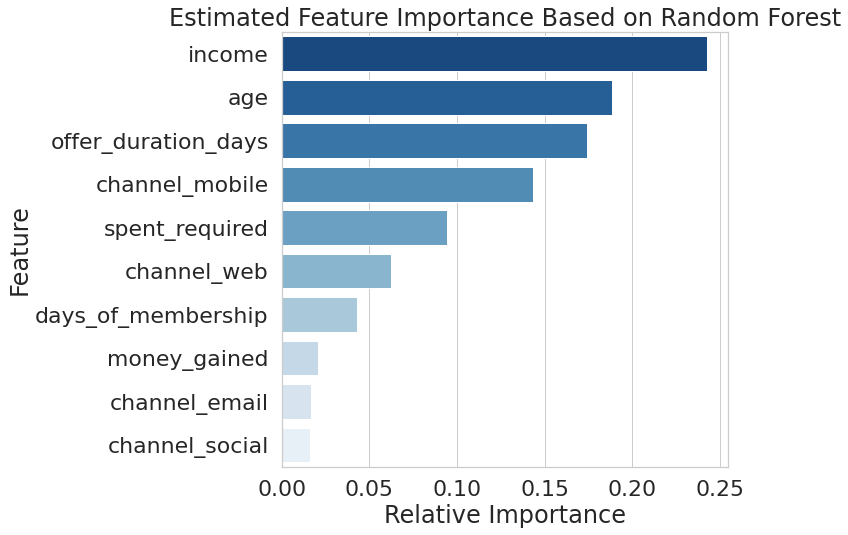

fig = plt.figure(figsize=(15,4))